MuMMER

Description

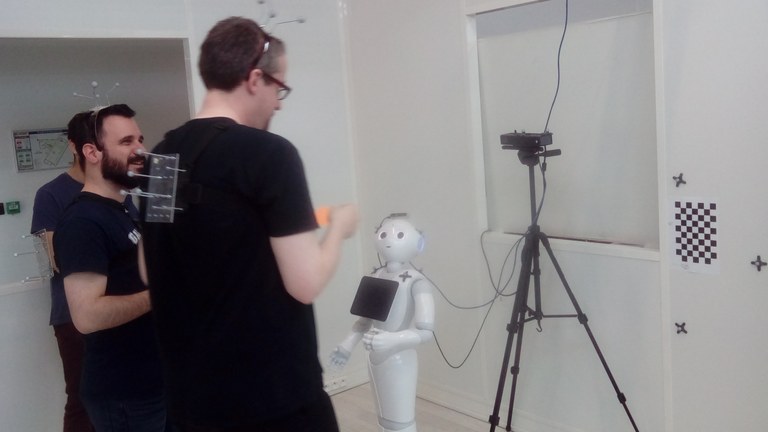

As part of the MuMMER project, we collected the MuMMER data set, a data set for human-robot interaction scenarios that is available for research purposes. It comprises 1 h 29 min of multimodal recordings of people interacting with the social robot Pepper in entertainment scenarios such as quiz, chat, and route guidance. In the 33 clips (1 to 4 min long), recorded from the robot's point of view, the participants interact with the robot in an unconstrained manner.

The data set exhibits interesting features and difficulties, such as people leaving the field of view, robot motion (head rotation, with the camera embedded in the head), and varying illumination conditions. It contains color and depth videos from a Kinect v2 and an Intel D435, as well as the video from Pepper 1.7.

All the visible faces and the identities in the data set were manually annotated, making the identities consistent across time and clips. The goal of the data set is to evaluate perception algorithms in multi-party human-robot interaction, in particular re-identification when a track is lost, as this ability is crucial for keeping the dialog history. The data set can easily be extended with other types of annotations.

Images: the scene, view from the Kinect, view from the Intel D435, view from the robot.

Statistics

To properly benchmark tracking and perception algorithms, we have annotated all the faces and identities that appear in the three color video streams. All identities are consistent between frames, sequences, and sensors.

Figure 1: Example of annotations in the 3 views: Kinect, Intel, Pepper

| Feature | Value |
|---|---|
| Number of participants | 28 |
| Number of protagonists | 22 |
| Number of clips | 33 |
| Shortest clip | 1 min 6 s |
| Longest clip | 5 min 6 s |
| Total duration | 1 h 29 min |
| Maximum number of persons in one frame | 9 |
| Kinect color frames | 80,488 |
| Kinect depth frames | 80,865 |
| Intel color frames | 80,346 |
| Intel depth frames | 80,310 |
| Robot color frames | 47,023 |
| Robot depth frames | 23,450 |
| Number of annotated faces | 506,713 |
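The frame counts and the total duration in the table give a rough idea of the average frame rate of each stream. The sketch below is only an illustration: it assumes that every stream spans the full 1 h 29 min (the duration is rounded to the minute, and the page does not state the nominal frame rates), so the results are approximate averages rather than sensor specifications. The names `FRAME_COUNTS` and `average_fps` are introduced here for the example.

```python
# Total duration from the statistics table: 1 h 29 min, rounded to the minute.
TOTAL_SECONDS = 1 * 3600 + 29 * 60

# Frame counts copied from the statistics table.
FRAME_COUNTS = {
    "Kinect color": 80_488,
    "Kinect depth": 80_865,
    "Intel color": 80_346,
    "Intel depth": 80_310,
    "Robot color": 47_023,
    "Robot depth": 23_450,
}


def average_fps(frame_count: int, duration_s: float) -> float:
    """Average frames per second, assuming the stream covers the whole duration."""
    if duration_s <= 0:
        raise ValueError("duration_s must be positive")
    return frame_count / duration_s


if __name__ == "__main__":
    for stream, count in FRAME_COUNTS.items():
        print(f"{stream}: about {average_fps(count, TOTAL_SECONDS):.1f} frames/s")
```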

Evaluation

The output of a tracker system can be evaluated in the same way as for the MOT challenge, using MATLAB code or Python code.
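As an illustration of what a MOT-challenge-style evaluation measures, the sketch below computes MOTA (multiple object tracking accuracy), one of the standard MOT metrics, from per-frame error counts. It is a minimal example and not the evaluation code referred to above: the `FrameCounts` class and the `mota` function are hypothetical names, and the matching of tracker output to annotated faces (which produces the counts) is assumed to have been done beforehand.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class FrameCounts:
    """Per-frame counts after matching tracker output to ground truth (hypothetical container)."""
    num_gt: int           # annotated faces in the frame
    false_negatives: int  # annotated faces with no matched track
    false_positives: int  # tracker outputs with no matched annotation
    id_switches: int      # matched faces whose track identity changed


def mota(frames: Iterable[FrameCounts]) -> float:
    """MOTA = 1 - (FN + FP + IDSW) / GT, with all terms summed over frames."""
    frames = list(frames)
    total_gt = sum(f.num_gt for f in frames)
    if total_gt == 0:
        raise ValueError("MOTA is undefined without ground-truth objects")
    errors = sum(
        f.false_negatives + f.false_positives + f.id_switches for f in frames
    )
    return 1.0 - errors / total_gt


if __name__ == "__main__":
    example = [
        FrameCounts(num_gt=4, false_negatives=1, false_positives=0, id_switches=0),
        FrameCounts(num_gt=4, false_negatives=0, false_positives=1, id_switches=1),
    ]
    print(f"MOTA = {mota(example):.3f}")
```

Identity switches are counted as errors in this metric, which ties in with the re-identification goal stated in the description: a tracker that assigns a new identity to a person who re-enters the field of view is penalized.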

We provide a baseline system (code available for research soon).

Samples

These videos show two examples of color videos in which the images of the 3 sensors were concatenated (from left to right: Kinect v2, Intel D435, Pepper 1.7). Faces are blurred and audio is removed for privacy reasons (the distributed data is raw: not blurred, and with audio).

An easy sequence (only 2 protagonists, no passersby)

A hard sequence (3 protagonists, multiple passersby)