EYEDIAP


The lack of a common benchmark for evaluating gaze estimation from RGB and RGB-D data is a serious limitation when comparing the strengths and weaknesses of the many algorithms proposed in the literature. The EYEDIAP dataset is intended to fill the need for a standard database for gaze estimation from remote RGB and RGB-D (standard vision and depth) cameras. The recording methodology was designed to systematically include, and isolate, most of the variables that affect remote gaze estimation algorithms.

Pre-defined benchmarks are provided to evaluate each of these aspects independently, and the data was preprocessed to extract and provide complementary observations (e.g. head pose).


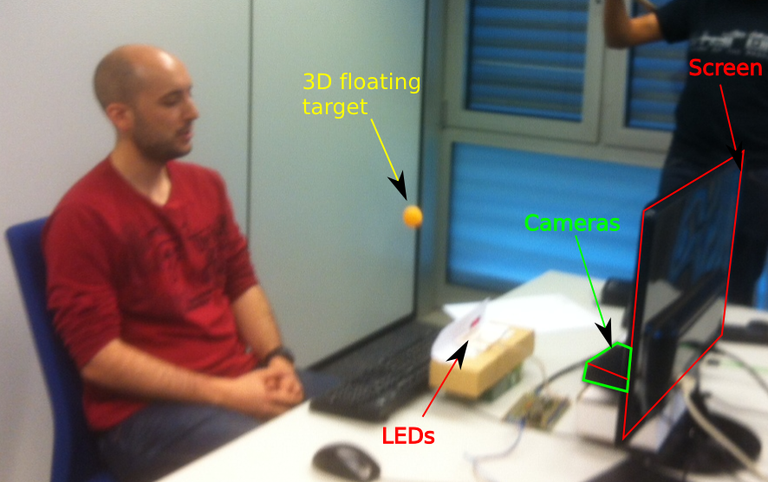

The recording set-up is shown in the accompanying image.

Set-up

The set-up is composed of the following elements:

Data per session

In each session folder, the following files can be found:

In total, the EYEDIAP database is composed of 94 sessions. For a list of the sessions, see the sessions list below.

Documentation

For more details on the processing methodology and data interpretation, please refer to this document:

Funes Mora, K. A., Monay, F., and Odobez, J.-M. 2014. EYEDIAP database: Data description and gaze tracking evaluation benchmarks. Tech. Rep. RR-08-2014, Idiap, May 2014.

which you can find here.

Each recording session is a combination of the following parameters:

- Participants. We have recorded 16 people: 12 male and 4 female.

- Recording conditions. For participants 14, 15 and 16, some sessions were recorded twice, under two different conditions (denoted A or B) that differ in recording day, illumination and distance to the camera.

- Visual target. The object that the participant was asked to gaze at. To be representative of different applications, we included the following cases:
  - Discrete screen target (DS): a small circle was drawn every 1.1 seconds at a uniformly random location on the computer screen.
  - Continuous screen target (CS): the circle was programmed to move along a random trajectory for 2 s, to obtain examples with smoother gaze movement.
  - 3D floating target (FT): a ball 4 cm in diameter, hanging from a thin thread attached to a stick, was moved within a 3D region between the camera and the participant. In contrast to the screen targets, the participant was at a larger distance from the camera (1.2 m instead of 80-90 cm) to leave sufficient space for the target to move.

- Head pose. To evaluate methods in terms of robustness to head pose, we asked participants to keep gazing at the visual target while (i) keeping an approximately static head pose facing towards the screen (Static case, S); or (ii) performing head movements (translation and rotation) to introduce head pose variations (Mobile case, M).

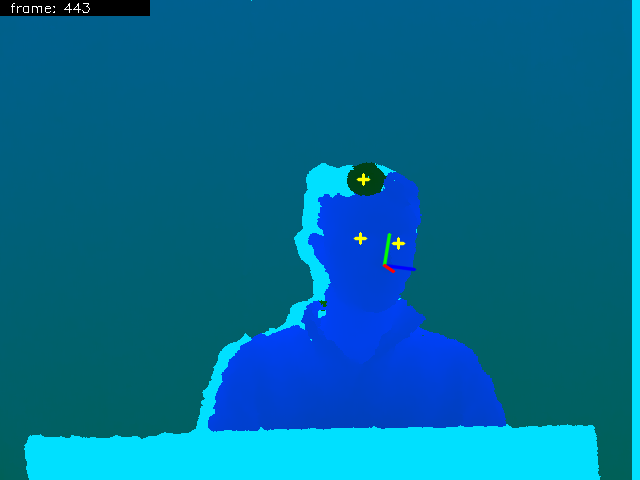

Visualization

To inspect the different sessions please run the following command within the "Scripts" folder:

python view_session.py <session_idx>

where session_idx is the session index, an integer in [0, 93]. Browsing through the code shows how to interpret the per-session metadata.
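When several sessions are inspected in a row, it can help to check the index range before launching the viewer. The following is a minimal sketch and is not part of the distribution: `build_view_command` and `view_session` are hypothetical helpers, and the sketch assumes only what is stated above, namely that `view_session.py` takes a single session index in [0, 93] and is run from the "Scripts" folder.

```python
import subprocess

NUM_SESSIONS = 94  # sessions are indexed 0..93


def build_view_command(session_idx):
    """Return the argument list for viewing one session (hypothetical helper)."""
    if isinstance(session_idx, bool) or not isinstance(session_idx, int):
        raise TypeError("session_idx must be an integer")
    if not 0 <= session_idx < NUM_SESSIONS:
        raise ValueError(f"session_idx must be in [0, {NUM_SESSIONS - 1}]")
    # Mirrors the documented command: python view_session.py <session_idx>
    return ["python", "view_session.py", str(session_idx)]


def view_session(session_idx, scripts_dir="Scripts"):
    """Run the viewer from the Scripts folder; blocks until it exits."""
    subprocess.run(build_view_command(session_idx), cwd=scripts_dir, check=True)
```

Calling `view_session(12)` would then be equivalent to running the command above with index 12, provided `python` resolves to an interpreter the scripts support.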

Sessions list

Each session is denoted by the string "P-C-T-H" which refers to the participant P=(1-16), the recording conditions C=(A or B), the target T=(DS, CS or FT) and the head pose H=(S or M) respectively. The 94 provided sessions are the following:

| Session index | Participant | Conditions | Visual target | Head pose |
|---|---|---|---|---|
| 0 | 1 | A | DS | S |
| 1 | 1 | A | DS | M |
| 2 | 1 | A | CS | S |
| 3 | 1 | A | CS | M |
| 4 | 1 | A | FT | S |
| 5 | 1 | A | FT | M |
| 6 | 2 | A | DS | S |
| 7 | 2 | A | DS | M |
| 8 | 2 | A | CS | S |
| 9 | 2 | A | CS | M |
| 10 | 2 | A | FT | S |
| 11 | 2 | A | FT | M |
| 12 | 3 | A | DS | S |
| 13 | 3 | A | DS | M |
| 14 | 3 | A | CS | S |
| 15 | 3 | A | CS | M |
| 16 | 3 | A | FT | S |
| 17 | 3 | A | FT | M |
| 18 | 4 | A | DS | S |
| 19 | 4 | A | DS | M |
| 20 | 4 | A | CS | S |
| 21 | 4 | A | CS | M |
| 22 | 4 | A | FT | S |
| 23 | 4 | A | FT | M |
| 24 | 5 | A | DS | S |
| 25 | 5 | A | DS | M |
| 26 | 5 | A | CS | S |
| 27 | 5 | A | CS | M |
| 28 | 5 | A | FT | S |
| 29 | 5 | A | FT | M |
| 30 | 6 | A | DS | S |
| 31 | 6 | A | DS | M |
| 32 | 6 | A | CS | S |
| 33 | 6 | A | CS | M |
| 34 | 6 | A | FT | S |
| 35 | 6 | A | FT | M |
| 36 | 7 | A | DS | S |
| 37 | 7 | A | DS | M |
| 38 | 7 | A | CS | S |
| 39 | 7 | A | CS | M |
| 40 | 7 | A | FT | S |
| 41 | 7 | A | FT | M |
| 42 | 8 | A | DS | S |
| 43 | 8 | A | DS | M |
| 44 | 8 | A | CS | S |
| 45 | 8 | A | CS | M |
| 46 | 8 | A | FT | S |
| 47 | 8 | A | FT | M |
| 48 | 9 | A | DS | S |
| 49 | 9 | A | DS | M |
| 50 | 9 | A | CS | S |
| 51 | 9 | A | CS | M |
| 52 | 9 | A | FT | S |
| 53 | 9 | A | FT | M |
| 54 | 10 | A | DS | S |
| 55 | 10 | A | DS | M |
| 56 | 10 | A | CS | S |
| 57 | 10 | A | CS | M |
| 58 | 10 | A | FT | S |
| 59 | 10 | A | FT | M |
| 60 | 11 | A | DS | S |
| 61 | 11 | A | DS | M |
| 62 | 11 | A | CS | S |
| 63 | 11 | A | CS | M |
| 64 | 11 | A | FT | S |
| 65 | 11 | A | FT | M |
| 66 | 12 | B | FT | S |
| 67 | 12 | B | FT | M |
| 68 | 13 | B | FT | S |
| 69 | 13 | B | FT | M |
| 70 | 14 | A | DS | S |
| 71 | 14 | A | DS | M |
| 72 | 14 | A | CS | S |
| 73 | 14 | A | CS | M |
| 74 | 14 | A | FT | S |
| 75 | 14 | A | FT | M |
| 76 | 14 | B | FT | S |
| 77 | 14 | B | FT | M |
| 78 | 15 | A | DS | S |
| 79 | 15 | A | DS | M |
| 80 | 15 | A | CS | S |
| 81 | 15 | A | CS | M |
| 82 | 15 | A | FT | S |
| 83 | 15 | A | FT | M |
| 84 | 15 | B | FT | S |
| 85 | 15 | B | FT | M |
| 86 | 16 | A | DS | S |
| 87 | 16 | A | DS | M |
| 88 | 16 | A | CS | S |
| 89 | 16 | A | CS | M |
| 90 | 16 | A | FT | S |
| 91 | 16 | A | FT | M |
| 92 | 16 | B | FT | S |
| 93 | 16 | B | FT | M |
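The table follows a regular pattern, so the mapping from session index to parameters can be rebuilt in code instead of being typed in by hand. The sketch below is an illustration derived only from the table above, not code shipped with the database; `build_session_list` and `session_label` are hypothetical names, and the hyphen-separated label follows the "P-C-T-H" notation used here, which may differ from the folder names in the distribution.

```python
from itertools import product

TARGETS = ("DS", "CS", "FT")  # discrete screen, continuous screen, floating target
HEAD_POSES = ("S", "M")       # static, mobile


def build_session_list():
    """Rebuild (participant, condition, target, head pose) tuples in index order."""
    sessions = []
    for participant in range(1, 17):
        if participant in (12, 13):
            # In the table, participants 12 and 13 appear only with condition B, target FT.
            blocks = [("B", ("FT",))]
        else:
            blocks = [("A", TARGETS)]
            if participant >= 14:
                # Participants 14-16 have additional FT sessions under condition B.
                blocks.append(("B", ("FT",)))
        for condition, targets in blocks:
            for target, head_pose in product(targets, HEAD_POSES):
                sessions.append((participant, condition, target, head_pose))
    return sessions


def session_label(session):
    """Format a session tuple as a "P-C-T-H" string."""
    return "-".join(str(field) for field in session)


SESSIONS = build_session_list()
print(len(SESSIONS))                # 94
print(session_label(SESSIONS[0]))   # 1-A-DS-S
print(session_label(SESSIONS[77]))  # 14-B-FT-M
```

A list like this makes it straightforward to select subsets, for example all mobile-head-pose sessions with `[i for i, s in enumerate(SESSIONS) if s[3] == "M"]`.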

If you have used this database and you would like your results to appear here, please send an email to kenneth.funes@idiap.ch

EYEDIAP experiment

The gaze tracking results reported in our ETRA 2014 paper are provided within the "Example" folder.

To recompute the reported metrics, such as the gaze estimation accuracy, please run the following command:

python compute_etra_results.py

within the "Scripts" folder.
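The scoring logic itself lives in compute_etra_results.py and is not reproduced here. For readers who want to score their own method, a common way to express gaze estimation accuracy is the angle between the estimated and the ground-truth 3D gaze directions. The sketch below illustrates that generic measure; it is an assumption about how accuracy might be computed, not a description of what the provided script does, and the function names are hypothetical.

```python
import math


def angular_error_deg(estimated, ground_truth):
    """Angle in degrees between two 3D gaze direction vectors."""
    dot = sum(e * g for e, g in zip(estimated, ground_truth))
    norm_e = math.sqrt(sum(e * e for e in estimated))
    norm_g = math.sqrt(sum(g * g for g in ground_truth))
    if norm_e == 0.0 or norm_g == 0.0:
        raise ValueError("gaze vectors must be non-zero")
    # Clamp so rounding cannot push the cosine outside [-1, 1].
    cosine = max(-1.0, min(1.0, dot / (norm_e * norm_g)))
    return math.degrees(math.acos(cosine))


def mean_angular_error_deg(estimates, ground_truths):
    """Average angular error over paired per-frame gaze vectors."""
    errors = [angular_error_deg(e, g) for e, g in zip(estimates, ground_truths)]
    if not errors:
        raise ValueError("no frames to evaluate")
    return sum(errors) / len(errors)
```

The vectors do not need to be normalised beforehand, since the function divides by both norms.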

Reference

If you use this dataset, please cite the following paper:

DOI: 10.1145/2578153.2578190

Please also refer to our technical report for an extended description of the dataset, which you can find here.

FAQ

Is there something wrong with the 3D coordinates given in the screen_coordinates.txt file?

Is there some sample code available?

Within the database distribution we provide a couple of sample scripts to visualise the given data.

What about some sample code for 3D processing?

Yes! Have a look at the RGBD Python processing module. It is fully compatible with the EYEDIAP database and should make it easy to start rendering the database in 3D.

Is there detailed documentation available?

Definitely. Have a look at the tech report.