AIM (Age Induced Makeup) Dataset

# Dataset Description

The Age Induced Makeup (AIM) dataset consists of presentation attacks in the form of age-progressive makeup. The subjects are male and female and come from various ethnicities. Professional artists applied facial makeup of varying intensity to give the subjects an older appearance. The dataset was created for experiments on makeup-based presentation attacks against face recognition systems. The original (grayscale) version of the AIM dataset was released in 2019, and it was supplemented with an RGB version in 2024.

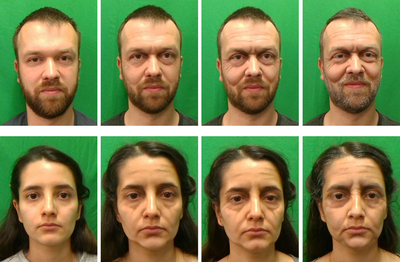

Samples of age-induced makeup with different levels of makeup intensity from the AIM dataset.

In each row, the leftmost image is bona fide (no makeup), and the makeup intensity increases from left to right.

The AIM dataset was originally curated to evaluate the vulnerability of face recognition systems to age-induced makeup attacks, and then to develop a mechanism for detecting such attacks (makeup presentation attack detection, i.e., anti-spoofing). The original version of the dataset, released in 2019, and the corresponding publication focused on a convolutional neural network (CNN) that required single-channel (grayscale) face images for face recognition tasks. To support the community in working with color data, the AIM dataset was supplemented with an RGB version in 2024.

Details of the Grayscale (Original) Version

- Face Images: Aligned and cropped to 128 × 128 pixels.

- Channels: 1-channel (grayscale) images.

Details of the RGB Version

The extended version consists of:

- Face Images: Aligned and cropped to 112 × 112 pixels.

- Channels: 3-channel (RGB) images.

- Consistency: The RGB images correspond to the same frames/images from the original grayscale dataset.

Image Processing Details

- Face and Landmark Detection: Detected using MTCNN (Multi-task Cascaded Convolutional Network).

- Face Alignment: After cropping, the face regions are aligned with a transformation based on five facial landmarks, following the approach used in the InsightFace repository.

- Image Format: In the grayscale version, each image is stored as a 2D matrix in HW format (height, width). In the RGB version, each image is stored in HWC format (height, width, channels), with the channels in R-G-B order.
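Some frameworks expect a different layout than HW or HWC with R-G-B channels: PyTorch, for example, works with channels-first (C, H, W) tensors, and OpenCV uses B-G-R channel order. The sketch below assumes the frames are NumPy arrays (as returned by h5py) and shows both conversions on synthetic arrays shaped like the two versions; the function names `to_chw` and `rgb_to_bgr` are illustrative and not part of the dataset tooling.

```python
import numpy as np


def to_chw(frame: np.ndarray) -> np.ndarray:
    """Convert an AIM frame to channels-first (C, H, W) layout.

    Grayscale frames (H, W) gain a leading channel axis of size 1;
    RGB frames (H, W, 3) have their channel axis moved to the front.
    """
    if frame.ndim == 2:
        return frame[np.newaxis, :, :]
    if frame.ndim == 3 and frame.shape[2] == 3:
        return np.ascontiguousarray(frame.transpose(2, 0, 1))
    raise ValueError(f"unexpected frame shape {frame.shape}")


def rgb_to_bgr(frame: np.ndarray) -> np.ndarray:
    """Reverse the channel order of an (H, W, 3) R-G-B frame, e.g. for OpenCV."""
    if frame.ndim != 3 or frame.shape[2] != 3:
        raise ValueError("expected an (H, W, 3) RGB frame")
    return np.ascontiguousarray(frame[:, :, ::-1])


# Synthetic frames shaped like the grayscale and RGB versions
gray = np.zeros((128, 128), dtype=np.uint8)
rgb = np.zeros((112, 112, 3), dtype=np.uint8)
rgb[..., 0] = 255  # pure red in R-G-B order

print(to_chw(gray).shape)                # (1, 128, 128)
print(to_chw(rgb).shape)                 # (3, 112, 112)
print(rgb_to_bgr(rgb)[0, 0].tolist())    # [0, 0, 255]
```

`np.ascontiguousarray` is used because transposing or reversing an axis only creates a strided view, and some libraries require contiguous memory.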

We have made our best effort to ensure that the RGB images (frames from video) closely match the original grayscale images in content. However, the exact frames may differ slightly because different versions of the Python software were used to process the original video frames.
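The alignment step listed under Image Processing Details maps the five landmarks detected by MTCNN (the two eye centers, the nose tip, and the two mouth corners) onto a fixed template. As a sketch only, assuming a least-squares similarity transform (Umeyama's method) and a five-point template for 112 × 112 crops whose coordinates we believe match the ArcFace template in InsightFace, the 2 × 3 warp matrix can be estimated with NumPy as below. The template values, landmark order, and function names are assumptions for illustration: they are not taken from the AIM preprocessing code, and the template for the 128 × 128 grayscale crops may differ.

```python
import numpy as np

# ASSUMED 5-point reference template (x, y) for a 112 x 112 crop, ordered as
# left eye, right eye, nose tip, left mouth corner, right mouth corner.
# Believed to match InsightFace's ArcFace template; verify before use.
TEMPLATE_112 = np.array(
    [
        [38.2946, 51.6963],
        [73.5318, 51.5014],
        [56.0252, 71.7366],
        [41.5493, 92.3655],
        [70.7299, 92.2041],
    ],
    dtype=np.float64,
)


def estimate_similarity(src: np.ndarray, dst: np.ndarray) -> np.ndarray:
    """Least-squares similarity transform (Umeyama) mapping src points onto dst.

    Returns a 2 x 3 matrix M such that dst ~= M @ [x, y, 1] for each point.
    """
    src = np.asarray(src, dtype=np.float64)
    dst = np.asarray(dst, dtype=np.float64)
    src_mean, dst_mean = src.mean(axis=0), dst.mean(axis=0)
    src_c, dst_c = src - src_mean, dst - dst_mean

    # Cross-covariance of the centred point sets and its SVD
    cov = dst_c.T @ src_c / len(src)
    u, s, vt = np.linalg.svd(cov)

    # Flip the last axis if needed so that the result is a rotation, not a reflection
    d = np.ones(2)
    if np.linalg.det(u) * np.linalg.det(vt) < 0:
        d[-1] = -1.0
    rotation = u @ np.diag(d) @ vt

    # Isotropic scale (relative to the source variance) and translation
    scale = (s * d).sum() / (src_c ** 2).sum(axis=1).mean()
    translation = dst_mean - scale * rotation @ src_mean

    return np.hstack([scale * rotation, translation[:, None]])


def apply_affine(m: np.ndarray, points: np.ndarray) -> np.ndarray:
    """Apply a 2 x 3 affine matrix to an (N, 2) array of points."""
    points = np.asarray(points, dtype=np.float64)
    return points @ m[:, :2].T + m[:, 2]
```

Given five detected landmarks `landmarks` (an (5, 2) array in the same order), `M = estimate_similarity(landmarks, TEMPLATE_112)` could then be passed to OpenCV as `cv2.warpAffine(image, M, (112, 112))` to obtain the aligned crop. A similarity transform (rotation, uniform scale, translation) is used here because it preserves face proportions, unlike a full affine fit.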

If you use this dataset, cite the following publication:

```bibtex
@article{Kotwal_TBIOM_2020,
  author    = {Kotwal, Ketan and Mostaani, Zohreh and Marcel, S\'{e}bastien},
  title     = {Detection of Age-Induced Makeup Attacks on Face Recognition Systems Using Multi-Layer Deep Features},
  journal   = {IEEE Transactions on Biometrics, Behavior, and Identity Science},
  publisher = {{IEEE}},
  year      = {2020},
  volume    = {2},
  number    = {1},
  month     = {Jan},
  pages     = {15--25},
}
```

Note

- Original (grayscale) Version: The grayscale version of the AIM dataset (128 × 128) was published in 2019.

- RGB Version: The RGB version of the AIM dataset (112 × 112 × 3) was published in 2024.

When using the AIM dataset in your research or publications, please specify which version of the dataset (grayscale or RGB) you used, to avoid confusing readers. Likewise, when comparing results or statistics based on the AIM dataset, state the version so that the comparison is consistent.
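To make it harder to mix up the versions in a pipeline, the version can also be checked programmatically. The sketch below relies only on the frame shapes stated in this note, (128, 128) for grayscale and (112, 112, 3) for RGB; the function name `aim_version_from_shape` is hypothetical.

```python
def aim_version_from_shape(shape: tuple) -> str:
    """Return "grayscale" or "RGB" for the shape of an AIM frame.

    Uses only the documented shapes: (128, 128) for the 2019 grayscale
    version and (112, 112, 3) for the 2024 RGB version.
    """
    known = {
        (128, 128): "grayscale",
        (112, 112, 3): "RGB",
    }
    version = known.get(tuple(shape))
    if version is None:
        raise ValueError(f"shape {tuple(shape)} does not match a known AIM version")
    return version


print(aim_version_from_shape((128, 128)))     # grayscale
print(aim_version_from_shape((112, 112, 3)))  # RGB
```

In practice, `data.shape` from a frame read out of the HDF5 file can be passed directly to this function.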

# Structure of AIM Dataset

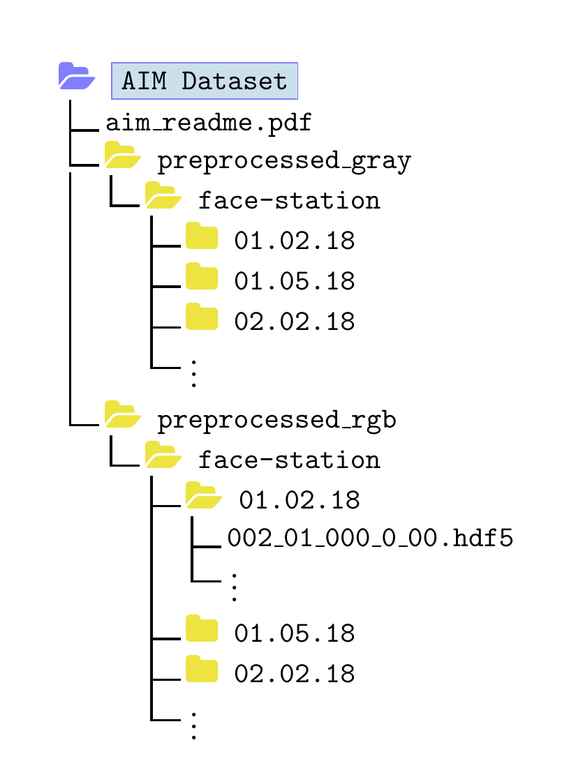

This description refers to the file/folder structure of the preprocessed (released) dataset. The grayscale and RGB versions have the same structure.

Structure of the (preprocessed) AIM Dataset (grayscale and RGB versions).

Like the grayscale version, the RGB version is provided in HDF5 format with exactly the same data structure, which makes it easy to use with existing pipelines built for the grayscale version. Sample Python code for reading the HDF5 files is provided below.
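To process every video, a script first has to locate the HDF5 files. The sketch below assumes only that the released files end in .hdf5 or .h5 somewhere under a root folder (the exact folder layout is shown in the structure figure); the function name `find_hdf5_files` and the root path are placeholders.

```python
import os
from pathlib import Path


def find_hdf5_files(root: str) -> list:
    """Recursively collect .hdf5 / .h5 file paths under root, sorted for reproducibility."""
    suffixes = {".hdf5", ".h5"}
    paths = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            if Path(name).suffix.lower() in suffixes:
                paths.append(os.path.join(dirpath, name))
    return sorted(paths)


# Example (hypothetical path to the extracted dataset):
# for path in find_hdf5_files("<path_to_aim_root>"):
#     print(path)
```

Sorting the paths keeps the processing order identical across runs and operating systems, since `os.walk` does not guarantee any particular order.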

Reading AIM Data (Images) from HDF5 Format

The Hierarchical Data Format version 5 (HDF5) is a versatile container format for storing and managing scientific data, and it is widely used across operating systems and platforms. Many open-source tools are available for viewing and processing HDF5 files, and popular programming languages such as C, C++, Python, and MATLAB support reading and writing them.

If you are using Python, install the h5py package, which provides a simple interface for reading and writing HDF5 files.

To install h5py via pip or conda:

```shell
pip install h5py
```

or

```shell
conda install h5py
```

The AIM dataset (both grayscale and RGB versions) contains uniformly sampled frames from video sequences. Each video provides 20 frames, named Frame_<num>, where num ranges from 0 to 19. These frame names correspond to the groups (or keys) in the HDF5 file.
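h5py typically lists the groups of a file in alphanumeric (string) order unless the file was written with creation-order tracking, so iterating over the keys can yield Frame_10 before Frame_2. When temporal order matters, for example when stacking frames into a sequence, the keys can be sorted by their numeric suffix. A minimal sketch, assuming only the Frame_<num> naming described above (the function name is illustrative):

```python
import re

FRAME_KEY = re.compile(r"^Frame_(\d+)$")


def sorted_frame_keys(keys) -> list:
    """Return Frame_<num> keys ordered by their integer frame number.

    Keys that do not follow the Frame_<num> pattern are ignored.
    """
    numbered = []
    for key in keys:
        match = FRAME_KEY.match(key)
        if match:
            numbered.append((int(match.group(1)), key))
    return [key for _, key in sorted(numbered)]


# String order puts Frame_10 before Frame_2; numeric order does not
keys = sorted(f"Frame_{i}" for i in range(20))
print(keys[:3])                     # ['Frame_0', 'Frame_1', 'Frame_10']
print(sorted_frame_keys(keys)[:3])  # ['Frame_0', 'Frame_1', 'Frame_2']
```

With an open file `f`, `for key in sorted_frame_keys(f.keys()):` visits the frames in temporal order.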

Example: Reading a Single Frame

To read the 5th frame (i.e., Frame_4) from an HDF5 file:

```python
import h5py

# path to the HDF5 file (the file should end with .hdf5 or .h5)
hdf_file = "<path_to_hdf5_file>"

# key (group name) of the frame to read: Frame_4 is the 5th frame
key = "Frame_4"

data = None
# open the HDF5 file in read-only mode and read the frame
with h5py.File(hdf_file, "r") as f:
    if key in f:
        # each frame group stores the image in a dataset named "array"
        data = f[key]["array"][()]
    else:
        print(f"Group {key} not found in HDF5 file {hdf_file}.")

# on successful reading, print the array shape and data type
if data is not None:
    print(data.shape, data.dtype)

# Expected output:
# (128, 128) uint8      for the grayscale version
# (112, 112, 3) uint8   for the RGB version
```

Example: Reading All Frames and Saving Them as Images

To read all 20 frames from an HDF5 file and save them as PNG or JPEG images:

```python
import os

import h5py
import matplotlib.pyplot as plt

# path to the HDF5 file and output directory
hdf_file = "<path_to_hdf5_file>"
output_directory = "<path_to_output_directory>"

# create the output directory if it does not already exist
os.makedirs(output_directory, exist_ok=True)

# open the HDF5 file and save every frame (group) as an image
with h5py.File(hdf_file, "r") as f:
    for key in f.keys():
        data = f[key]["array"][()]
        output_path = os.path.join(output_directory, key + ".png")  # or ".jpg"
        # cmap, vmin and vmax only affect 2D grayscale frames; RGB frames keep their colors
        plt.imsave(output_path, data, vmin=0, vmax=255, cmap=plt.cm.gray)
```

# Data Collection

The AIM dataset consists of 456 video recordings, comprising both bona fide and presentation attack (PA) videos, each approximately 10 s long. The videos were acquired using the RGB channel of an Intel RealSense SR300 camera.

The dataset contains 240 bona fide (non-makeup) presentations from 72 subjects and 216 attack (age-induced makeup) presentations captured from a subset of 20 subjects. For every subject who took part in the makeup presentations, a bona fide (non-makeup) video is also available.

The makeup was applied by professionals using regular makeup materials to create age-inducing effects such as coloring the eyebrows and creating wrinkles on the cheeks or forehead. No prosthetic objects or materials were used.

Complete preprocessed data for these videos are provided to facilitate reproducing the experiments from the reference publication as well as conducting new experiments. Details of the preprocessing can be found in the reference publication. The AIM dataset is a subset of the WMCA dataset collected at the Idiap Research Institute.

The implementation of all experiments described in the reference publication is available at https://gitlab.idiap.ch/bob/bob.paper.makeup_aim

Experimental Protocols from Reference Publication

The reference publication uses an experimental protocol named grandtest. For frame-level evaluation, 20 frames from each video are used. In the grandtest protocol, the videos are divided into three fixed, disjoint groups: train, dev, and eval. Each group contains a unique subset of subjects, so the subjects of one group do not appear in the other two.
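When building custom splits in the same spirit as grandtest, the subject-disjointness requirement can be checked directly. The sketch below uses hypothetical subject IDs (the actual subject lists are defined by the grandtest protocol and are not reproduced here) and reports every pair of groups that shares a subject; the function name is illustrative.

```python
from itertools import combinations


def shared_subjects(groups: dict) -> dict:
    """Return the subjects that appear in more than one group.

    groups maps a group name (e.g. "train", "dev", "eval") to an iterable of
    subject IDs. The result maps each overlapping pair of groups to the sorted
    list of subjects they share; it is empty when all groups are disjoint.
    """
    overlaps = {}
    for (name_a, ids_a), (name_b, ids_b) in combinations(groups.items(), 2):
        common = set(ids_a) & set(ids_b)
        if common:
            overlaps[(name_a, name_b)] = sorted(common)
    return overlaps


# Hypothetical subject IDs, for illustration only
split = {"train": ["s01", "s02"], "dev": ["s03", "s04"], "eval": ["s05", "s02"]}
print(shared_subjects(split))  # {('train', 'eval'): ['s02']}
```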

| Partition | # Videos | # Frames | Split Ratio within Group (%) | Group Total (Frames) |
|---|---|---|---|---|
| train bona-fide | 86 | 1720 | 54.43 | 3160 |
| train PA | 72 | 1440 | 45.57 | |
| dev bona-fide | 80 | 1600 | 52.63 | 3040 |
| dev PA | 72 | 1440 | 47.37 | |
| eval bona-fide | 74 | 1480 | 50.68 | 2920 |
| eval PA | 72 | 1440 | 49.32 | |
| Total | 456 | 9120 | | 9120 |
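The frame counts and split ratios in the table follow from the video counts, since each video contributes 20 frames. The sketch below recomputes them from the per-group video counts listed in the table; the dictionary layout and the function name `grandtest_summary` are illustrative.

```python
FRAMES_PER_VIDEO = 20

# Video counts per group and presentation type, taken from the table above
VIDEOS = {
    "train": {"bona-fide": 86, "PA": 72},
    "dev": {"bona-fide": 80, "PA": 72},
    "eval": {"bona-fide": 74, "PA": 72},
}


def grandtest_summary(videos: dict, frames_per_video: int = FRAMES_PER_VIDEO) -> dict:
    """Map (group, type) to (videos, frames, split ratio in %, group total frames)."""
    summary = {}
    for group, counts in videos.items():
        group_frames = sum(counts.values()) * frames_per_video
        for kind, n_videos in counts.items():
            n_frames = n_videos * frames_per_video
            ratio = round(100 * n_frames / group_frames, 2)
            summary[(group, kind)] = (n_videos, n_frames, ratio, group_frames)
    return summary


summary = grandtest_summary(VIDEOS)
for (group, kind), row in summary.items():
    print(group, kind, row)

total_videos = sum(row[0] for row in summary.values())
total_frames = sum(row[1] for row in summary.values())
print(total_videos, total_frames)  # 456 9120
```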