DIH: Depth Images with Humans

Introduction

The DIH dataset has been created for human body landmark detection and human pose estimation from depth images. Training and deploying good models for these tasks requires large amounts of data with high-quality annotations. Unfortunately, precise manual annotation of body parts in depth images is difficult: people appear roughly as blobs, and the annotation task is very time-consuming. Synthesizing images, by contrast, makes it easy to introduce variability in body pose and viewpoint, and high-quality annotations can be generated automatically.

However, synthetic depth images do not match real depth data in several respects, namely the visual characteristics that arise from the depth sensing process: measurement noise and, most problematically, depth discontinuities and missing measurements. Real annotated data is therefore needed to close the performance gap that this mismatch can cause when models are applied to real images. With this in mind, the DIH dataset also provides annotated real data captured with a Kinect 2. This real data can be used for both fine-tuning and testing.

Dataset

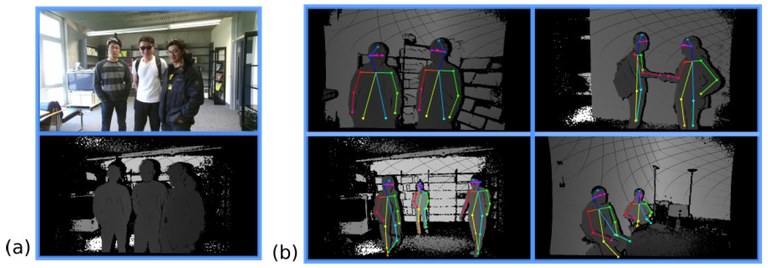

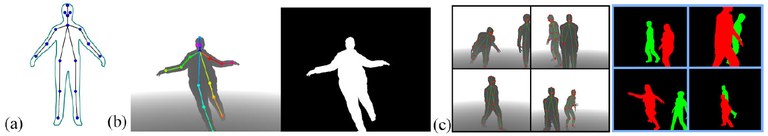

The DIH dataset contains a set of synthetic images and a set of images acquired with a Kinect 2 depth sensor, as detailed below. Both sets contain annotations of 17 body landmarks: head, neck, shoulders, elbows, wrists, hips, knees, ankles and eyes (Figure 1(a)). We encourage you to look at our example code for loading and visualizing the data.

Synthetic Images (S-DIH)

The synthetic images were generated with a pipeline built in Blender. We used 24 3D adult characters created with the MakeHuman modelling software. The characters are of both genders, have different heights and weights, and are dressed in different outfits (skirts, coats, pullovers, etc.) to increase shape variation.

For each character, we performed motion retargeting from motion capture data, relying on the publicly available CMU motion capture database. We selected a variety of motion capture sequences of people walking, playing sports, standing still, bending, picking up objects, etc. In addition, we considered scenarios with more than one person by synthesizing images with two 3D characters.

The DIH synthetic dataset contains around 264K depth images in total, with the following contents:

- Synthetic depth images, 16-bit format.

- Binary masks, 8-bit format. For images with two people, the masks are colored (see Figure 1).

- Annotations:

  - Body landmarks in image coordinates. See Figure 1(b) and (c).

  - Body landmarks in 3D coordinates (camera reference frame).

  - Body landmark visibility flags.
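The 2D and 3D landmark annotations are related by the camera projection. The sketch below shows that relation under stated assumptions: a standard pinhole camera with hypothetical intrinsics `fx`, `fy`, `cx`, `cy` (the actual rendering parameters of S-DIH are not given here), and a visibility flag of 0 meaning "not visible". The function name `project_landmarks` is illustrative, not part of the dataset code.

```python
from typing import List, Optional, Tuple

Point3D = Tuple[float, float, float]
Point2D = Tuple[float, float]


def project_landmarks(
    points_3d: List[Point3D],
    visibility: List[int],
    fx: float,
    fy: float,
    cx: float,
    cy: float,
) -> List[Optional[Point2D]]:
    """Project camera-frame 3D landmarks to pixel coordinates (pinhole model).

    Assumption: a visibility flag of 0 means "not visible". Such landmarks,
    and landmarks behind the camera (Z <= 0), are returned as None.
    """
    projected: List[Optional[Point2D]] = []
    for (x, y, z), vis in zip(points_3d, visibility):
        if vis == 0 or z <= 0:
            projected.append(None)
            continue
        # u = fx * X / Z + cx,  v = fy * Y / Z + cy
        projected.append((fx * x / z + cx, fy * y / z + cy))
    return projected


if __name__ == "__main__":
    # Hypothetical intrinsics and landmarks, for illustration only.
    pts = [(0.0, 0.0, 2.0), (0.5, -0.25, 2.5), (0.1, 0.1, 3.0)]
    vis = [1, 1, 0]
    print(project_landmarks(pts, vis, fx=365.0, fy=365.0, cx=256.0, cy=212.0))
    # → [(256.0, 212.0), (329.0, 175.5), None]
```

Because the projection only divides by Z, it works in any consistent length unit for the 3D coordinates; the focal lengths must be expressed in pixels.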

In addition, we provide the folds used in our experiments (see References below):

| Fold | Number of images | Annotations file name |
|---|---|---|
| Training | 230934 | DIH_train2018_annotations_selected.json |
| Validation | 22333 | DIH_val2018_annotations_selected.json |
| Testing | 11165 | DIH_test2018_annotations_smallset.json |
| Validation (subset) | 5000 | DIH_val2018_annotations_smallset.json |
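As a quick consistency check, the three main folds add up to the roughly 264K images stated above. The 5,000-image validation subset is treated here as drawn from the validation fold, so it is not counted again. The counts are copied from the table; `fold_fractions` is a hypothetical helper.

```python
# Image counts per fold, copied from the table above.
FOLDS = {
    "train": 230_934,
    "val": 22_333,
    "test": 11_165,
}
VAL_SUBSET = 5_000  # subset of the validation fold, not an extra fold


def fold_fractions(folds: dict) -> dict:
    """Return each fold's share of the total number of images."""
    total = sum(folds.values())
    return {name: count / total for name, count in folds.items()}


if __name__ == "__main__":
    total = sum(FOLDS.values())
    print(total)  # 264432
    for name, frac in fold_fractions(FOLDS).items():
        print(f"{name}: {frac:.1%}")
```

This gives a split of roughly 87% training, 8% validation and 4% testing.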

Real Depth Images of Kinect 2 (R-DIH)

We study landmark detection and pose estimation in the context of Human-Robot Interaction (HRI). To this end, we conducted a series of data collection sessions, recording videos of people interacting with a robotic platform.

The data consists of 16 sequences in HRI settings recorded with a Kinect 2. Each sequence lasts up to three minutes and is composed of pairs of registered color and depth images. The interactions were performed by 9 different participants in indoor settings, with different background scenes and natural interaction situations. In addition, participants were asked to wear different clothing accessories to add variability in body shape.
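Each sequence stores its registered frames in `color` and `depth` subfolders of the sequence's `kinect` directory (see Data structure). The sketch below pairs the two modalities. It assumes, hypothetically, that a registered color/depth pair shares the same base file name; the actual naming scheme is not documented here. Frames present in only one folder are skipped. `pair_frames` is an illustrative name.

```python
import tempfile
from pathlib import Path
from typing import List, Tuple


def pair_frames(kinect_dir: Path) -> List[Tuple[Path, Path]]:
    """Pair color and depth images that share the same file stem.

    Assumption (hypothetical): a registered pair uses the same base name in
    kinect_dir/color and kinect_dir/depth, possibly with different extensions.
    """
    color = {p.stem: p for p in (kinect_dir / "color").iterdir() if p.is_file()}
    depth = {p.stem: p for p in (kinect_dir / "depth").iterdir() if p.is_file()}
    # Keep only stems present in both folders, in sorted (frame) order.
    return [(color[s], depth[s]) for s in sorted(color.keys() & depth.keys())]


if __name__ == "__main__":
    # Build a throwaway directory with hypothetical file names.
    with tempfile.TemporaryDirectory() as tmp:
        kinect = Path(tmp) / "sequence_1" / "kinect"
        layout = {"color": ["0001.png", "0002.png"], "depth": ["0001.png", "0003.png"]}
        for sub, names in layout.items():
            (kinect / sub).mkdir(parents=True)
            for name in names:
                (kinect / sub / name).touch()
        print([(c.name, d.name) for c, d in pair_frames(kinect)])
        # → [('0001.png', '0001.png')]
```

On the real data, the argument would be a directory such as `DIH/real/data/<sequence>/kinect`. Sequence folder names are normally encoded, so they should be listed rather than guessed.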

Each sequence shows up to three people captured at different distances from the sensor. We split the data taking into account clothing, actor ID and interaction scenario, so that the same actor does not appear in both the training and test sets under similar circumstances.

The training, validation and test folds consist of 7, 5 and 4 sequences, respectively. Refer to the file DIH/real/annotations/Sequence-Folds-Definition.txt for more information about the split and the sequence IDs.
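Because the split is made at the sequence level, no sequence should contribute frames to more than one fold. The sketch below is a minimal sanity check. It assumes the fold-to-sequence mapping has already been read into a dictionary; the format of Sequence-Folds-Definition.txt is not described here, so parsing it is left out. The sequence names in the example are hypothetical placeholders. The check verifies only that sequences are disjoint and that the counts are 7/5/4. The actor and scenario constraint cannot be verified without per-sequence metadata.

```python
from itertools import combinations
from typing import Dict, List


def check_sequence_split(folds: Dict[str, List[str]], expected: Dict[str, int]) -> None:
    """Raise ValueError if folds share sequences or have unexpected sizes."""
    for name, count in expected.items():
        actual = len(folds.get(name, []))
        if actual != count:
            raise ValueError(f"fold {name!r} has {actual} sequences, expected {count}")
    # Every pair of folds must be disjoint at the sequence level.
    for (a, seqs_a), (b, seqs_b) in combinations(folds.items(), 2):
        shared = set(seqs_a) & set(seqs_b)
        if shared:
            raise ValueError(f"folds {a!r} and {b!r} share sequences: {sorted(shared)}")


if __name__ == "__main__":
    # Hypothetical sequence IDs; the real ones are listed in
    # DIH/real/annotations/Sequence-Folds-Definition.txt.
    folds = {
        "train": [f"seq_{i:02d}" for i in range(1, 8)],   # 7 sequences
        "val": [f"seq_{i:02d}" for i in range(8, 13)],    # 5 sequences
        "test": [f"seq_{i:02d}" for i in range(13, 17)],  # 4 sequences
    }
    check_sequence_split(folds, {"train": 7, "val": 5, "test": 4})
    print("split OK:", sum(len(s) for s in folds.values()), "sequences")
```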

We provide body landmark annotations for a subset of images in the training, validation and test folds. To annotate body landmarks, we first register each synchronized pair of depth and RGB images. We then use an implementation of convolutional pose machines with part affinity fields to obtain an initial estimate of the body landmarks in the image. These landmarks are then manually refined, and the refined landmarks constitute the annotations used in our experiments. The following table summarizes the number of annotated images per fold:

| Fold | Number of images | Annotations file name |
|---|---|---|
| Training | 1750 | DIH_kinect_train2018_annotations_smallset.json |
| Validation | 750 | DIH_kinect_val2018_annotations.json |
| Testing | 1000 | DIH_kinect_test2018_annotations.json |

Code

Annotation files for the synthetic and real data use the same format to store body landmark annotations. Each entry in a JSON file contains the following fields:

- person_id : person id (only for the synthetic data).

- keypoints : body landmarks in the image coordinate space (2D).

- keypoints_3D : body landmarks in the 3D camera coordinate space (only for synthetic data).

- b_box : bounding box of the person.

References

If you use this data, you are required to cite the following papers:

@INPROCEEDINGS{Martinez_IROS_2018,
author={Mart\'inez-Gonz\'alez, Angel and Villamizar, Michael and Can{\'e}vet, Olivier and Odobez, Jean-Marc},
booktitle={2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)},
title={Real-time Convolutional Networks for Depth-based Human Pose Estimation},
year={2018},
volume={},
number={},
pages={41-47},
doi={10.1109/IROS.2018.8593383},
ISSN={2153-0866},
month={Oct},
}

@INPROCEEDINGS{Martinez_ECCVW_2018,
author = {Mart\'inez-Gonz\'alez, Angel and Villamizar, Michael and Can{\'e}vet, Olivier and Odobez, Jean-Marc},
title = {Investigating Depth Domain Adaptation for Efficient Human Pose Estimation},
booktitle = {2018 European Conference on Computer Vision - Workshops, {ECCV} 2018},
month = {September},
year = {2018},
location = {Munich, Germany},
}

Data structure

The data directory has the following structure:

DIH
|
|-- code               # Sample code for loading and visualizing body landmarks
|-- documentation      # DIH documentation files
|-- synthetic
|   |-- annotations    # JSON files defining annotations for training, testing and validation sets
|   |-- depth          # Depth images divided into different folders
|   |-- mask_paths     # Binary masks for all data
|   |-- meterials      # Masks generated only for images with two people
|-- real
|   |-- data
|   |   |-- sequence_1         # Recording sequence (folder names are normally encoded)
|   |   |   |-- kinect
|   |   |   |   |-- color      # Registered color images
|   |   |   |   |-- depth      # Registered depth images