VRBiom

Description

The VRBiom (Virtual Reality Dataset for Biometric Applications) dataset was acquired using a head-mounted display (HMD) to benchmark and develop biometric use-cases such as iris and periocular recognition, along with associated sub-tasks such as detection and semantic segmentation. It consists of 900 short videos acquired from 25 individuals, recorded in the near-infrared (NIR) spectrum. To encompass real-world variations, the dataset includes recordings under three gaze conditions: steady, moving, and partially closed eyes. It also maintains an equal split of recordings without and with glasses to facilitate the analysis of eyewear. The videos are characterized by non-frontal views of the eye and a relatively low spatial resolution (400 × 400 pixels). The dataset also includes 1104 presentation attacks (PAs) constructed from 92 PA instruments (PAIs). These PAIs fall into six categories constructed through combinations of print attacks (real and synthetic identities), fake 3D eyeballs, plastic eyes, and various types of masks and mannequins.

Reference

If you use this dataset, please cite the following publication(s) depending on the use:

@article{vrbiom_dataset_arxiv2024,

author = {Kotwal, Ketan and Ulucan, Ibrahim and \"{O}zbulak, G\"{o}khan and Selliah, Janani and Marcel, S\'{e}bastien},

title = {VRBiom: A New Periocular Dataset for Biometric Applications of HMD},

year = {2024},

month = {Jul},

journal = {arXiv preprint arXiv:2407.02150},

DOI = {https://doi.org/10.48550/arXiv.2407.02150}

}

@inproceedings{vrbiom_pad_ijcb2024,

author = {Kotwal, Ketan and \"{O}zbulak, G\"{o}khan and Marcel, S\'{e}bastien},

title = {Assessing the Reliability of Biometric Authentication on Virtual Reality Devices},

booktitle = {Proceedings of IEEE International Joint Conference on Biometrics (IJCB2024)},

month = {Sep},

year = {2024}

}

Data collection

The VRBiom dataset consists of about 2000 iris/periocular videos captured using cameras integrated into the Meta Quest Pro headset: 900 bona-fide videos from 25 subjects and 1104 PA videos. The acquisition process for the bona-fide and PA recordings is described below.

1. Bona-fide Recordings:

A total of 25 subjects, aged between 18 and 50 and representing a diverse range of skin tones and eye colors, participated in the data collection process. The recordings were divided into two sub-sessions: the first without the subject wearing glasses and the second with glasses. For each sub-session, three recordings were captured for each of the following gaze variations:

- Steady Gaze: The subject maintains a nearly fixed gaze position by fixating their eyes on a specific (virtual) object.

- Moving Gaze: The subject’s gaze moves freely across the scene.

- Partially Closed Eyes: The subject keeps their eyes partially closed without focusing on any particular gaze.

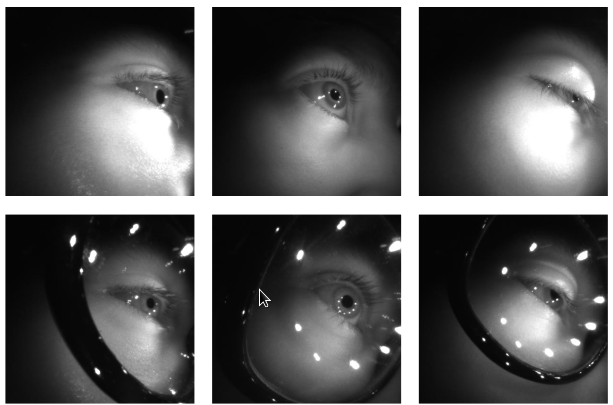

Figure 1. Samples of bona-fide recordings from the VRBiom dataset. Each row presents a sample of steady gaze, moving gaze, and partially closed eyes (from left to right). Top and bottom rows refer to recordings without and with glasses, respectively.
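The bona-fide protocol described above can be enumerated as a quick sanity check. The sketch below assumes that every recording yields one video per eye (left and right); the text does not state this explicitly, but it is consistent with the L/R field in the file names and with the total of 900 bona-fide videos. All variable names are illustrative.

```python
from itertools import product

SUBJECTS = range(1, 26)                            # 25 subjects
GLASSES = ("without", "with")                      # two sub-sessions
GAZES = ("steady", "moving", "partially closed")   # three gaze variations
REPETITIONS = (1, 2, 3)                            # three recordings per gaze variation
EYES = ("L", "R")                                  # assumption: one video per eye

# One tuple per expected bona-fide video.
conditions = list(product(SUBJECTS, GLASSES, GAZES, REPETITIONS, EYES))
videos_per_subject = len(conditions) // len(SUBJECTS)

print(len(conditions))       # 900
print(videos_per_subject)    # 36
```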

2. PA Recordings:

Most of the PAs were constructed by combining two categories of attack instruments:

- the eyes region

- the periocular region

For eyes, a variety of instruments including fake 3D eyes (eyeballs), printouts from synthetic and real identities, and plastic-made synthetic eyes were used to construct attacks. For the periocular region, mannequins and 3D masks made of different materials were employed.

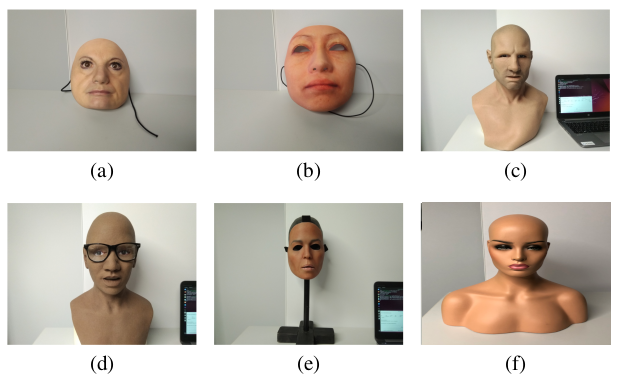

Figure 2. PA instruments used to construct attacks in the VRBiom: (a) Rigid masks with own eyes, (b) rigid masks with fake eyeballs, (c) flex masks with print attacks, (d) flex masks with print attacks, (e) flex masks with fake eyeballs, and (f) mannequins.

Structure of the Dataset

Each presentation is recorded in a separate file in *.avi format. The recording files have the following directory structure:

/data

/data/<subject_id>

/data/<subject_id>/<record>.avi
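Given this layout, the recordings can be indexed per subject with the standard library. This is a minimal sketch: the function name `list_recordings` and the root path passed to it are hypothetical, and it only assumes one sub-directory per subject ID containing `.avi` files.

```python
from pathlib import Path


def list_recordings(root):
    """Map each subject directory name to a sorted list of its .avi files."""
    root = Path(root)
    recordings = {}
    for subject_dir in sorted(p for p in root.iterdir() if p.is_dir()):
        # Only the video files are collected; anything else is ignored.
        recordings[subject_dir.name] = sorted(subject_dir.glob("*.avi"))
    return recordings


if __name__ == "__main__":
    data_root = Path("data")  # hypothetical location of the extracted dataset
    if data_root.is_dir():
        for subject_id, files in list_recordings(data_root).items():
            print(subject_id, len(files))
```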

Overall Statistics:

Table 1 summarizes the details of the VRBiom dataset. It also provides the naming conventions used to indicate the type of PAI.

| Type | Series | Subtype | #Identities | #Videos | Attack Types |
|---|---|---|---|---|---|
| bona-fide | 0xx | [steady gaze, moving gaze, partially closed] × [glass, no glass] | 25 | 900 | - |
| Presentation Attacks | 2xx | Mannequins | 7 | 84 | Own eyes (same material) |
| Presentation Attacks | 3xx | Custom rigid mask (type I) | 10 | 120 | Own eyes (same material) |
| Presentation Attacks | 4xx | Custom rigid mask (type II) | 14 | 168 | Fake 3D eyeballs |
| Presentation Attacks | 5xx | Generic flexible masks | 20 | 240 | Print attacks (synthetic data) |
| Presentation Attacks | 6xx | Custom silicone masks | 16 | 192 | Fake 3D eyeballs |
| Presentation Attacks | 7xx | Print attacks | 25 | 300 | Print attacks (real data) |
| Total | | | 25 + 92 | 2004 | |

Table 1: Details of bona-fide and different types of PAs from the VRBiom dataset. Each video was recorded at 72 FPS for approximately 10s.
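The totals in Table 1 can be cross-checked with a few lines of arithmetic. In the sketch below the per-series counts are copied from the table; the frame estimate simply multiplies the stated 72 FPS by the approximate 10 s duration, so it is only a rough figure.

```python
# series -> (number of identities, number of videos), copied from Table 1
TABLE_1 = {
    "0xx": (25, 900),   # bona-fide
    "2xx": (7, 84),     # mannequins
    "3xx": (10, 120),   # custom rigid mask (type I)
    "4xx": (14, 168),   # custom rigid mask (type II)
    "5xx": (20, 240),   # generic flexible masks
    "6xx": (16, 192),   # custom silicone masks
    "7xx": (25, 300),   # print attacks
}

pa_series = {s: counts for s, counts in TABLE_1.items() if s != "0xx"}
pa_identities = sum(n for n, _ in pa_series.values())
pa_videos = sum(v for _, v in pa_series.values())
total_videos = TABLE_1["0xx"][1] + pa_videos

# Every PA series contributes the same number of videos per instrument.
videos_per_pai = {s: v // n for s, (n, v) in pa_series.items()}

approx_frames_per_video = 72 * 10  # 72 FPS for roughly 10 s

print(pa_identities, pa_videos, total_videos)  # 92 1104 2004
print(set(videos_per_pai.values()))            # {12}
print(approx_frames_per_video)                 # 720
```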

All recordings are stored in *.avi format. The naming convention consists of the following fields: type (bona-fide or PA), subject ID (or PA ID), eye (left or right), glasses (without or with), type of gaze (steady, moving, or partially closed), claimed ID (for PAs), details of PAI (for PAs), recording ID, and a random suffix. The filename has the following format:

<type>_<subject-id>_<eyes--glasses--gaze>_<subject-id>_<claimed-id>_<transaction>_<random-suffix>.avi

Some examples:

- for bona-fide → “BF_001_L00_001_00_1_9f15e6d6.avi”

- for Presentation Attack → “PA_201_L00_201_22_1_ss43yhvk.avi”
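A file name in this format can be split into its fields with a regular expression. The sketch below is not part of the dataset tooling: the function name `parse_record_name` and the group names are illustrative, the field widths follow the naming convention of this section, and the suffix is assumed to be eight alphanumeric characters, as in the two examples above.

```python
import re

# <type>_<subject-id>_<eyes--glasses--gaze>_<subject-id>_<claimed-id>_<transaction>_<random-suffix>.avi
RECORD_PATTERN = re.compile(
    r"^(?P<type>BF|PA)"
    r"_(?P<subject_id>\d{3})"
    r"_(?P<eye>[LR])(?P<glasses>[01])(?P<gaze>[012])"
    r"_(?P<subject_id_repeat>\d{3})"
    r"_(?P<claimed_id>\d{2})"
    r"_(?P<transaction>\d)"
    r"_(?P<suffix>[0-9A-Za-z]{8})"   # assumption: 8 alphanumeric characters
    r"\.avi$"
)


def parse_record_name(filename):
    """Return the fields of a VRBiom file name as a dict of strings."""
    match = RECORD_PATTERN.match(filename)
    if match is None:
        raise ValueError(f"unexpected file name: {filename!r}")
    return match.groupdict()


fields = parse_record_name("PA_201_L00_201_22_1_ss43yhvk.avi")
print(fields["type"], fields["subject_id"], fields["claimed_id"])  # PA 201 22
```

Keeping the fields as strings preserves leading zeros such as subject ID `001`.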

Table 2 describes each field of the naming convention:

| No. | Component | Length | Description |
|---|---|---|---|
| 1 | type | 2 char | BF or PA: string indicating whether the corresponding sample is bona-fide or PA. |
| 2 | subject-id | 3 digits | The first digit indicates the series (see Table 1 for the details of the attack series). |
| 3 | eyes--glasses--gaze | 1 char + 2 digits | 1 char: L or R, specifying the left or right eye. 2 digits: the first digit (0 or 1) indicates glasses (0: without glasses, 1: with glasses); the second digit (0, 1, or 2) indicates the type of gaze (0: Steady, 1: Moving, 2: Partially closed). |
| 4 | subject-id | 3 digits | Same as the second component, which assigns a unique number to each bona-fide subject and presentation attack instrument. |
| 5 | claimed-id | 2 digits | The 2 digits indicate the type of PA: the 1st digit gives the type of PAI for the eyes (0: no PA, 1: printed eyes, 2: 3D eyes/eyeballs); the 2nd digit gives the type of PAI for the mask (0: no PA, 1: rigid mask, 2: flexible mask). For example, claimed-id=22 indicates the mannequins, which means 3D eyes and rigid, and claimed-id=00 indicates bona-fide subjects. |
| 6 | transaction | 1 digit | Each recording was repeated 3 times. This number indicates the serial number of the recording. |
| 7 | random-suffix | 8 characters | An arbitrary alphanumeric string. This string helps to distinguish each presentation uniquely. |

Table 2: The naming convention.
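The coded digits of Table 2 can be turned into readable labels with simple lookup tables. This is a minimal sketch that follows the digit legends given in the table; the function name `describe_codes` and the dictionary names are hypothetical.

```python
EYE = {"L": "left", "R": "right"}
GLASSES = {"0": "without glasses", "1": "with glasses"}
GAZE = {"0": "steady", "1": "moving", "2": "partially closed"}
PAI_EYES = {"0": "no PA", "1": "printed eyes", "2": "3D eyes/eyeballs"}
PAI_MASK = {"0": "no PA", "1": "rigid mask", "2": "flexible mask"}


def describe_codes(eyes_glasses_gaze, claimed_id):
    """Decode the third and fifth file-name components into labels."""
    eye, glasses, gaze = eyes_glasses_gaze   # e.g. "L00" -> "L", "0", "0"
    pai_eyes, pai_mask = claimed_id          # e.g. "00"  -> "0", "0"
    return {
        "eye": EYE[eye],
        "glasses": GLASSES[glasses],
        "gaze": GAZE[gaze],
        "pai_eyes": PAI_EYES[pai_eyes],
        "pai_mask": PAI_MASK[pai_mask],
    }


# Components taken from the bona-fide example "BF_001_L00_001_00_1_9f15e6d6.avi".
print(describe_codes("L00", "00"))
```

An unknown code raises a `KeyError`, which makes malformed file names easy to spot.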

Notes:

| Series | Records | File names | Reason |
|---|---|---|---|
| 0xx | | | The entire eye area is not covered. Nearly 60% of the area of interest covered. |
| 7xx | | | |
| 3xx | | | Strong reflection at the beginning of the record. |

Table 3: Notes on Recordings.