Python API

This section includes information for using the pure Python API of bob.ip.optflow.hornschunck.

- bob.ip.optflow.hornschunck.get_config()

Returns a string containing the configuration information.

- class bob.ip.optflow.hornschunck.CentralGradient

Bases: object

Computes the spatio-temporal gradient using a 3-term approximation

This class computes the spatio-temporal gradient using a 3-term approximation composed of 2 separable kernels (one for the difference term and another one for the averaging term).

Constructor Documentation:

bob.ip.optflow.hornschunck.CentralGradient (difference, average, (height, width))

Constructor

The estimator is initialized with the shape of the images to be processed and with the kernels to apply. The shape is used to size the internal buffers.

Parameters:

difference : array-like, 1D float64

The kernel that contains the difference operation. Typically, this is [1, 0, -1]. Note that the kernel is mirrored during the convolution operation: to obtain a [-1, 0, +1] sliding operator, specify [+1, 0, -1]. This kernel must have shape (3,).

average : array-like, 1D float64

The kernel that contains the spatial averaging operation. This kernel is typically [+1, +1, +1] and must have shape (3,).

(height, width) : tuple

The height and width of the images to be fed into the gradient estimator.

Class Members:

- average

array-like, 1D float64 <– The kernel that contains the spatial averaging operation. Typically, this is [+1, +1, +1]. This kernel must have shape (3,).

- difference

array-like, 1D float64 <– The kernel that contains the difference operation. Typically, this is [1, 0, -1]. Note that the kernel is mirrored during the convolution operation: to obtain a [-1, 0, +1] sliding operator, specify [+1, 0, -1]. This kernel must have shape (3,).
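The mirroring note can be checked with NumPy, whose `np.convolve` performs a true (flipped-kernel) convolution. This minimal sketch assumes the library follows the same convention as `np.convolve`. It only illustrates why specifying [+1, 0, -1] yields a [-1, 0, +1] sliding operator. The two border values come from NumPy's implicit zero padding and do not necessarily reflect the library's border handling.

```python
import numpy as np

# Difference kernel as it would be given to the constructor.
kernel = np.array([+1.0, 0.0, -1.0])

# A 1D signal (x squared) sampled at x = 0..4.
x = np.array([0.0, 1.0, 4.0, 9.0, 16.0])

# np.convolve flips the kernel, so [+1, 0, -1] slides over the signal as
# [-1, 0, +1]: each interior output is out[i] = x[i + 1] - x[i - 1].
out = np.convolve(x, kernel, mode="same")

# The first and last samples are affected by NumPy's zero padding.
print(out)
```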

- evaluate(image1, image2, image3[, ex, ey, et]) → ex, ey, et

Evaluates the spatio-temporal gradient from the input image triplet.

Parameters:

image1, image2, image3 : array-like (2D, float64)

Sequence of images to evaluate the gradient from. All images should have the same shape, which should match that of this functor. The gradient is evaluated with respect to the image in the center of the triplet.

ex, ey, et : array (2D, float64)

The evaluated gradients in the horizontal, vertical and time directions (respectively) will be output in these variables, which should have dimensions matching those of this functor. If you don’t provide arrays for ex, ey and et, they will be allocated internally and returned. You must provide either all of ex, ey and et or none of them; otherwise an exception is raised.

Returns:

ex, ey, et : array (2D, float64)

The evaluated gradients are returned by this function. Each matrix will have a shape that matches the input images.
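To make the "2 separable kernels" description concrete, the NumPy sketch below applies the difference kernel along one direction and the averaging kernel along the other two. It is a hypothetical illustration, not the library code. It assumes that x runs along columns (axis 1), that y runs along rows (axis 0), that borders are zero-padded, and that the gradient is taken at the central image. The helper names `conv_along`, `conv_time` and `central_gradient_sketch` are invented for this example.

```python
import numpy as np


def conv_along(img, kernel, axis):
    """Convolve each 1D line of `img` along `axis` with `kernel`.

    np.convolve mirrors the kernel and zero-pads the borders (mode="same").
    """
    return np.apply_along_axis(np.convolve, axis, img, kernel, mode="same")


def conv_time(kernel, frames):
    """3-tap convolution over (image1, image2, image3), taken at image2.

    Because the kernel is mirrored, kernel[2] weights image1 and kernel[0]
    weights image3.
    """
    return kernel[2] * frames[0] + kernel[1] * frames[1] + kernel[0] * frames[2]


def central_gradient_sketch(difference, average, image1, image2, image3):
    """Hypothetical separable 3-term spatio-temporal gradient (not the library code).

    The difference kernel is applied along the gradient direction and the
    averaging kernel along the two remaining directions.
    """
    d = np.asarray(difference, dtype=np.float64)
    a = np.asarray(average, dtype=np.float64)
    frames = [np.asarray(f, dtype=np.float64) for f in (image1, image2, image3)]

    ex = conv_time(a, [conv_along(conv_along(f, d, 1), a, 0) for f in frames])
    ey = conv_time(a, [conv_along(conv_along(f, a, 1), d, 0) for f in frames])
    et = conv_time(d, [conv_along(conv_along(f, a, 1), a, 0) for f in frames])
    return ex, ey, et


# Usage with Sobel-like kernels on a horizontal ramp that brightens by 1 per frame.
ramp = np.tile(np.arange(6, dtype=np.float64), (5, 1))  # ramp[y, x] = x
ex, ey, et = central_gradient_sketch(
    [+1, 0, -1], [1, 2, 1], ramp, ramp + 1.0, ramp + 2.0
)
print(ex[1:-1, 1:-1])  # interior: 2 (difference) * 4 (y average) * 4 (t average)
```

On this input, the interior of `ey` is zero because the ramp does not vary along y. The interior of `et` reflects the uniform brightening between image1 and image3.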

- shape

tuple <– The shape pre-configured for this gradient estimator: (height, width)

- class bob.ip.optflow.hornschunck.Flow

Bases: object

Estimates the Optical Flow between images.

This is a variant of the vanilla Horn & Schunck method that uses a Sobel gradient estimator instead of the forward estimator used by the classical method. The Laplacian operator is also replaced with a more common implementation. Because the Sobel filter requires 3 images for the gradient estimation, this implementation takes 3 images instead of just 2, and the flow is calculated with respect to the central image.

For more details on the general technique from Horn & Schunck, see the module’s documentation.

Constructor Documentation:

bob.ip.optflow.hornschunck.Flow ((height, width))

Initializes the functor with the sizes of images to be treated.

Parameters:

(height, width) : tuple

The height and width of the images to be fed into the flow estimator.

Class Members:

- estimate(alpha, iterations, image1, image2, image3[, u, v]) → u, v

Estimates the optical flow leading to image2, using the preceding image image1 and the following image image3. All input images should be 2D 64-bit float arrays with the shape (height, width) specified when the object was constructed.

Parameters:

alpha : float

The weighting factor between brightness constancy and field smoothness. According to the original paper, $\alpha^2$ should be roughly equal to the noise in the estimate of $E_x^2 + E_y^2$. In practice, many algorithms consider values around 200 a good default. The higher this number, the more weight is given to smoothness.

iterations : int

Number of iterations for which to minimize the flow error.

image1, image2, image3 : array-like (2D, float64)

Sequence of images to estimate the flow from.

u, v : array (2D, float64)

The estimated flows in the horizontal and vertical directions (respectively) will be output in these variables, which should have dimensions matching those of this functor. If you don’t provide arrays for u and v, they will be allocated internally and returned. You must provide either both u and v or neither; otherwise an exception is raised. Note that if you provide non-zero u and v, they are taken as initial values for the error minimization. These arrays are updated with the final value of the flow leading to image2.

Returns:

u, v : array (2D, float)

The estimated flows in the horizontal and vertical directions (respectively).

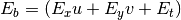

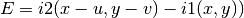

- eval_eb(image1, image2, image3, u, v) → error¶

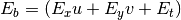

Calculates the brightness error (

) as defined in the paper:

) as defined in the paper:

Parameters:

image1, image2, image3 : array-like (2D, float64)

Sequence of images the flow was estimated with.

u, v : array-like (2D, float64)

The estimated flows in the horizontal and vertical directions (respectively), which should have dimensions matching those of this functor.

Returns:

error : array (2D, float)

The evaluated brightness error.
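As a minimal illustration of the brightness residual from the Horn & Schunck paper, $E_x u + E_y v + E_t$, the NumPy sketch below evaluates it per pixel from precomputed gradients. The function name `brightness_error_sketch` is hypothetical. The gradients are supplied directly here, whereas the method computes them from image1, image2 and image3. The sketch only shows that a flow satisfying brightness constancy gives a zero residual.

```python
import numpy as np


def brightness_error_sketch(ex, ey, et, u, v):
    """Per-pixel brightness residual E_x * u + E_y * v + E_t (hypothetical helper)."""
    return ex * u + ey * v + et


# A pattern moving 2 pixels per frame to the right: with E_x = 1 and E_y = 0,
# brightness constancy gives E_t = -E_x * u = -2.
shape = (4, 4)
ex = np.ones(shape)
ey = np.zeros(shape)
et = np.full(shape, -2.0)

good = brightness_error_sketch(ex, ey, et, np.full(shape, 2.0), np.zeros(shape))
bad = brightness_error_sketch(ex, ey, et, np.zeros(shape), np.zeros(shape))
print(np.abs(good).max(), np.abs(bad).max())
```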

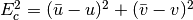

- eval_ec2(u, v) → error¶

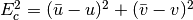

Calculates the square of the smoothness error (

) by using

the formula described in the paper:

) by using

the formula described in the paper:  . Sets the input matrix with the discrete values.

. Sets the input matrix with the discrete values.Parameters:

u, v : array-like (2D, float64)

The estimated flows in the horizontal and vertical directions (respectively), which should have dimensions matching those of this functor.

Returns:

error : array (2D, float)

The square of the smoothness error.

- shape

tuple <– The shape pre-configured for this flow estimator: (height, width)

- class bob.ip.optflow.hornschunck.ForwardGradient

Bases: object

Computes the spatio-temporal gradient using a 2-term approximation

This class computes the spatio-temporal gradient using a 2-term approximation composed of 2 separable kernels (one for the difference term and another one for the averaging term).

Constructor Documentation:

bob.ip.optflow.hornschunck.ForwardGradient (difference, average, (height, width))

Constructor

The estimator is initialized with the shape of the images to be processed and with the kernels to apply. The shape is used to size the internal buffers.

Parameters:

difference : array-like, 1D float64

The kernel that contains the difference operation. Typically, this is [1, -1]. Note that the kernel is mirrored during the convolution operation: to obtain a [-1, +1] sliding operator, specify [+1, -1]. This kernel must have shape (2,).

average : array-like, 1D float64

The kernel that contains the spatial averaging operation. This kernel is typically [+1, +1] and must have shape (2,).

(height, width) : tuple

The height and width of the images to be fed into the gradient estimator.

Class Members:

- average

array-like, 1D float64 <– The kernel that contains the spatial averaging operation. Typically, this is [+1, +1]. This kernel must have shape (2,).

- difference

array-like, 1D float64 <– The kernel that contains the difference operation. Typically, this is [1, -1]. Note that the kernel is mirrored during the convolution operation: to obtain a [-1, +1] sliding operator, specify [+1, -1]. This kernel must have shape (2,).

- evaluate(image1, image2[, ex, ey, et]) → ex, ey, et

Evaluates the spatio-temporal gradient from the input image pair.

Parameters:

image1, image2 : array-like (2D, float64)

Sequence of images to evaluate the gradient from. Both images should have the same shape, which should match that of this functor.

ex, ey, et : array (2D, float64)

The evaluated gradients in the horizontal, vertical and time directions (respectively) will be output in these variables, which should have dimensions matching those of this functor. If you don’t provide arrays for ex, ey and et, they will be allocated internally and returned. You must provide either all of ex, ey and et or none of them; otherwise an exception is raised.

Returns:

ex, ey, et : array (2D, float64)

The evaluated gradients are returned by this function. Each matrix will have a shape that matches the input images.
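The NumPy sketch below shows one way the two 2-tap kernels could be combined into a forward gradient over an image pair. It illustrates the idea under stated assumptions and is not the library implementation. The assumptions are: x runs along columns and y along rows; the difference kernel is applied along one direction and the averaging kernel along the other two; and only the "valid" region of shape (height - 1, width - 1) is returned. The library, by contrast, returns full-size arrays with its own border handling. The helper names are hypothetical.

```python
import numpy as np


def conv_valid(img, kernel, axis):
    """Convolve each 1D line of `img` along `axis`, keeping only the valid part.

    With a 2-tap kernel [k0, k1], np.convolve (which mirrors the kernel)
    produces k0 * f[i + 1] + k1 * f[i].
    """
    return np.apply_along_axis(np.convolve, axis, img, kernel, mode="valid")


def forward_gradient_sketch(difference, average, image1, image2):
    """Hypothetical 2-term spatio-temporal gradient for an image pair."""
    d = np.asarray(difference, dtype=np.float64)
    a = np.asarray(average, dtype=np.float64)
    f1 = np.asarray(image1, dtype=np.float64)
    f2 = np.asarray(image2, dtype=np.float64)

    def spatial(f, kx, ky):
        # kx along x (axis 1), then ky along y (axis 0).
        return conv_valid(conv_valid(f, kx, 1), ky, 0)

    def temporal(k, g1, g2):
        # Mirrored 2-tap kernel over time: k[0] weights image2, k[1] weights image1.
        return k[0] * g2 + k[1] * g1

    ex = temporal(a, spatial(f1, d, a), spatial(f2, d, a))
    ey = temporal(a, spatial(f1, a, d), spatial(f2, a, d))
    et = temporal(d, spatial(f1, a, a), spatial(f2, a, a))
    return ex, ey, et


# Kernels documented for HornAndSchunckGradient: difference [+1/4, -1/4],
# averaging [+1, +1]. Input: a horizontal ramp that brightens by 1 between frames.
ramp = np.tile(np.arange(5, dtype=np.float64), (4, 1))  # ramp[y, x] = x
ex, ey, et = forward_gradient_sketch([0.25, -0.25], [1.0, 1.0], ramp, ramp + 1.0)
print(ex.shape, ex[0, 0], ey[0, 0], et[0, 0])
```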

- shape

tuple <– The shape pre-configured for this gradient estimator: (height, width)

- class bob.ip.optflow.hornschunck.HornAndSchunckGradient

Bases: bob.ip.optflow.hornschunck.ForwardGradient

Computes the spatio-temporal gradient using a 2-term approximation

This class computes the spatio-temporal gradient using the same approximation as the one described by Horn & Schunck in the paper titled ‘Determining Optical Flow’, published in 1981, Artificial Intelligence, Vol. 17, No. 1-3, pp. 185-203.

This is equivalent to convolving the image sequence with the following (separable) kernels:

$$
\begin{aligned}
E_x &= \frac{1}{4} \left([-1,+1]^T \ast ([+1,+1] \ast i_1) + [-1,+1]^T \ast ([+1,+1] \ast i_2)\right) \\
E_y &= \frac{1}{4} \left([+1,+1]^T \ast ([-1,+1] \ast i_1) + [+1,+1]^T \ast ([-1,+1] \ast i_2)\right) \\
E_t &= \frac{1}{4} \left([+1,+1]^T \ast ([+1,+1] \ast i_1) - [+1,+1]^T \ast ([+1,+1] \ast i_2)\right)
\end{aligned}
$$

Constructor Documentation:

bob.ip.optflow.hornschunck.HornAndSchunckGradient ((height, width))

Constructor

The estimator is initialized with the shape of the images to be processed. The shape is used to size the internal buffers.

The difference kernel for this operator is fixed to $[+1/4; -1/4]$. The averaging kernel is fixed to $[+1; +1]$.

Parameters:

(height, width) : tuple

The height and width of the images to be fed into the gradient estimator.

Class Members:

- average

array-like, 1D float64 <– The kernel that contains the spatial averaging operation. For this class, it is fixed to [+1, +1]. This kernel must have shape (2,).

- difference

array-like, 1D float64 <– The kernel that contains the difference operation. For this class, it is fixed to [+1/4; -1/4]. Note that the kernel is mirrored during the convolution operation: to obtain a [-1, +1] sliding operator, specify [+1, -1]. This kernel must have shape (2,).

- evaluate(image1, image2[, ex, ey, et]) → ex, ey, et

Evaluates the spatio-temporal gradient from the input image pair.

Parameters:

image1, image2 : array-like (2D, float64)

Sequence of images to evaluate the gradient from. Both images should have the same shape, which should match that of this functor.

ex, ey, et : array (2D, float64)

The evaluated gradients in the horizontal, vertical and time directions (respectively) will be output in these variables, which should have dimensions matching those of this functor. If you don’t provide arrays for ex, ey and et, they will be allocated internally and returned. You must provide either all of ex, ey and et or none of them; otherwise an exception is raised.

Returns:

ex, ey, et : array (2D, float64)

The evaluated gradients are returned by this function. Each matrix will have a shape that matches the input images.
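For comparison with the original paper, the sketch below transcribes Horn & Schunck's first-difference estimates. Each estimate averages four differences over a 2x2x2 cube of pixels, indexed by rows i, columns j and frames k. The sketch follows the paper's indexing (x along columns, y along rows), which may differ from the library's sign and axis conventions. It returns only the (height - 1, width - 1) interior. The function name is hypothetical.

```python
import numpy as np


def hs_cube_gradient_sketch(image1, image2):
    """Hypothetical transcription of the Horn & Schunck (1981) gradient estimates.

    Each estimate is the mean of four first differences taken over the cube
    formed by pixels (i, j), (i, j+1), (i+1, j), (i+1, j+1) in both frames.
    """
    f1 = np.asarray(image1, dtype=np.float64)
    f2 = np.asarray(image2, dtype=np.float64)

    def diff_x(f):  # E[i, j+1] - E[i, j] + E[i+1, j+1] - E[i+1, j]
        return f[:-1, 1:] - f[:-1, :-1] + f[1:, 1:] - f[1:, :-1]

    def diff_y(f):  # E[i+1, j] - E[i, j] + E[i+1, j+1] - E[i, j+1]
        return f[1:, :-1] - f[:-1, :-1] + f[1:, 1:] - f[:-1, 1:]

    def block_sum(f):  # E[i, j] + E[i, j+1] + E[i+1, j] + E[i+1, j+1]
        return f[:-1, :-1] + f[:-1, 1:] + f[1:, :-1] + f[1:, 1:]

    ex = 0.25 * (diff_x(f1) + diff_x(f2))
    ey = 0.25 * (diff_y(f1) + diff_y(f2))
    et = 0.25 * (block_sum(f2) - block_sum(f1))
    return ex, ey, et


# A horizontal ramp that brightens by 1 between the two frames.
ramp = np.tile(np.arange(5, dtype=np.float64), (4, 1))  # ramp[i, j] = j
ex, ey, et = hs_cube_gradient_sketch(ramp, ramp + 1.0)
print(ex.shape, float(ex.mean()), float(ey.mean()), float(et.mean()))
```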

- shape

tuple <– The shape pre-configured for this gradient estimator: (height, width)

- class bob.ip.optflow.hornschunck.IsotropicGradient¶

Bases: bob.ip.optflow.hornschunck.CentralGradient

Computes the spatio-temporal gradient using a Isotropic filter

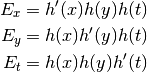

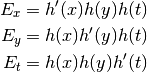

This class computes the spatio-temporal gradient using a 3-D isotropic filter. The gradients are calculated along the x, y and t directions. The Isotropic operator can be decomposed into 2 1D kernels that are applied in sequence. Considering

![h'(\cdot) = [+1, 0, -1]](../../../../_images/math/a8aa50d39fddc1ba241876d929483c3ba1983fd8.png) and

and

![h(\cdot) = [1, \sqrt{2}, 1]](../../../../_images/math/4cc21c9bc23e40ec2bc0b39c530b002a96ab9682.png) one can represent the operations

like this:

one can represent the operations

like this:This is equivalent to convolving the image sequence with the following (separate) kernels:
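Each direction combines one difference kernel with two averaging kernels, so the equivalent 3-D kernels can be built as outer products. The NumPy sketch below does this for the isotropic pair. Passing [1, 1, 1] or [1, 2, 1] as the averaging kernel gives the Prewitt and Sobel variants documented below. The axis order (t, y, x) and the helper name `separable_kernels_3d` are assumptions made for illustration only.

```python
import numpy as np


def separable_kernels_3d(h_prime, h):
    """Build 3x3x3 kernels, indexed [t, y, x], as outer products of 1D kernels.

    The difference kernel h' is placed along the gradient direction and the
    averaging kernel h along the two remaining directions.
    """
    hp = np.asarray(h_prime, dtype=np.float64)
    h = np.asarray(h, dtype=np.float64)
    kx = np.einsum("t,y,x->tyx", h, h, hp)
    ky = np.einsum("t,y,x->tyx", h, hp, h)
    kt = np.einsum("t,y,x->tyx", hp, h, h)
    return kx, ky, kt


kx, ky, kt = separable_kernels_3d([+1.0, 0.0, -1.0], [1.0, np.sqrt(2.0), 1.0])
print(kx[1])  # central time slice of the x-kernel
```

Because convolution is associative, convolving the stacked triplet with these kernels and keeping the central frame should match applying the 1-D kernels one after the other.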

Constructor Documentation:

bob.ip.optflow.hornschunck.IsotropicGradient ((height, width))

Constructor

The estimator is initialized with the shape of the images to be processed. The shape is used to size the internal buffers.

The difference kernel for this operator is fixed to $[+1, 0, -1]$. The averaging kernel is fixed to $[1, \sqrt{2}, 1]$.

Parameters:

(height, width) : tuple

The height and width of the images to be fed into the gradient estimator.

Class Members:

- average

array-like, 1D float64 <– The kernel that contains the spatial averaging operation. For this class, it is fixed to [1, √2, 1]. This kernel must have shape (3,).

- difference

array-like, 1D float64 <– The kernel that contains the difference operation. For this class, it is fixed to [+1, 0, -1]. Note that the kernel is mirrored during the convolution operation: to obtain a [-1, 0, +1] sliding operator, specify [+1, 0, -1]. This kernel must have shape (3,).

- evaluate(image1, image2, image3[, ex, ey, et]) → ex, ey, et

Evaluates the spatio-temporal gradient from the input image triplet.

Parameters:

image1, image2, image3 : array-like (2D, float64)

Sequence of images to evaluate the gradient from. All images should have the same shape, which should match that of this functor. The gradient is evaluated with respect to the image in the center of the triplet.

ex, ey, et : array (2D, float64)

The evaluated gradients in the horizontal, vertical and time directions (respectively) will be output in these variables, which should have dimensions matching those of this functor. If you don’t provide arrays for ex, ey and et, they will be allocated internally and returned. You must provide either all of ex, ey and et or none of them; otherwise an exception is raised.

Returns:

ex, ey, et : array (2D, float64)

The evaluated gradients are returned by this function. Each matrix will have a shape that matches the input images.

- shape

tuple <– The shape pre-configured for this gradient estimator: (height, width)

- class bob.ip.optflow.hornschunck.PrewittGradient¶

Bases: bob.ip.optflow.hornschunck.CentralGradient

Computes the spatio-temporal gradient using a Prewitt filter

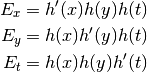

This class computes the spatio-temporal gradient using a 3-D prewitt filter. The gradients are calculated along the x, y and t directions. The Prewitt operator can be decomposed into 2 1D kernels that are applied in sequence. Considering

![h'(\cdot) = [+1, 0, -1]](../../../../_images/math/a8aa50d39fddc1ba241876d929483c3ba1983fd8.png) and

and

![h(\cdot) = [1, 1, 1]](../../../../_images/math/98ea80ce54134b61f940f91880d405a768a4d025.png) one can represent the operations like

this:

one can represent the operations like

this:This is equivalent to convolving the image sequence with the following (separate) kernels:

Constructor Documentation:

bob.ip.optflow.hornschunck.PrewittGradient ((height, width))

Constructor

The estimator is initialized with the shape of the images to be processed. The shape is used to size the internal buffers.

The difference kernel for this operator is fixed to $[+1, 0, -1]$. The averaging kernel is fixed to $[1, 1, 1]$.

Parameters:

(height, width) : tuple

The height and width of the images to be fed into the gradient estimator.

Class Members:

- average

array-like, 1D float64 <– The kernel that contains the spatial averaging operation. For this class, it is fixed to [1, 1, 1]. This kernel must have shape (3,).

- difference

array-like, 1D float64 <– The kernel that contains the difference operation. For this class, it is fixed to [+1, 0, -1]. Note that the kernel is mirrored during the convolution operation: to obtain a [-1, 0, +1] sliding operator, specify [+1, 0, -1]. This kernel must have shape (3,).

- evaluate(image1, image2, image3[, ex, ey, et]) → ex, ey, et

Evaluates the spatio-temporal gradient from the input image triplet.

Parameters:

image1, image2, image3 : array-like (2D, float64)

Sequence of images to evaluate the gradient from. All images should have the same shape, which should match that of this functor. The gradient is evaluated with respect to the image in the center of the triplet.

ex, ey, et : array (2D, float64)

The evaluated gradients in the horizontal, vertical and time directions (respectively) will be output in these variables, which should have dimensions matching those of this functor. If you don’t provide arrays for ex, ey and et, they will be allocated internally and returned. You must provide either all of ex, ey and et or none of them; otherwise an exception is raised.

Returns:

ex, ey, et : array (2D, float64)

The evaluated gradients are returned by this function. Each matrix will have a shape that matches the input images.

- shape

tuple <– The shape pre-configured for this gradient estimator: (height, width)

- class bob.ip.optflow.hornschunck.SobelGradient

Bases: bob.ip.optflow.hornschunck.CentralGradient

Computes the spatio-temporal gradient using a Sobel filter

This class computes the spatio-temporal gradient using a 3-D Sobel filter. The gradients are calculated along the x, y and t directions. The Sobel operator can be decomposed into 2 1D kernels that are applied in sequence. Considering $h'(\cdot) = [+1, 0, -1]$ and $h(\cdot) = [1, 2, 1]$, one can represent the operations like this:

$$
\begin{aligned}
E_x &= h'(x) \ast h(y) \ast h(t) \\
E_y &= h(x) \ast h'(y) \ast h(t) \\
E_t &= h(x) \ast h(y) \ast h'(t)
\end{aligned}
$$

This is equivalent to convolving the image sequence with a separable 3-D kernel for each direction.

Constructor Documentation:

bob.ip.optflow.hornschunck.SobelGradient ((height, width))

Constructor

The estimator is initialized with the shape of the images to be processed. The shape is used to size the internal buffers.

The difference kernel for this operator is fixed to $[+1, 0, -1]$. The averaging kernel is fixed to $[1, 2, 1]$.

Parameters:

(height, width) : tuple

The height and width of the images to be fed into the gradient estimator.

Class Members:

- average

array-like, 1D float64 <– The kernel that contains the spatial averaging operation. For this class, it is fixed to [1, 2, 1]. This kernel must have shape (3,).

- difference

array-like, 1D float64 <– The kernel that contains the difference operation. For this class, it is fixed to [+1, 0, -1]. Note that the kernel is mirrored during the convolution operation: to obtain a [-1, 0, +1] sliding operator, specify [+1, 0, -1]. This kernel must have shape (3,).

- evaluate(image1, image2, image3[, ex, ey, et]) → ex, ey, et

Evaluates the spatio-temporal gradient from the input image triplet.

Parameters:

image1, image2, image3 : array-like (2D, float64)

Sequence of images to evaluate the gradient from. All images should have the same shape, which should match that of this functor. The gradient is evaluated with respect to the image in the center of the triplet.

ex, ey, et : array (2D, float64)

The evaluated gradients in the horizontal, vertical and time directions (respectively) will be output in these variables, which should have dimensions matching those of this functor. If you don’t provide arrays for ex, ey and et, they will be allocated internally and returned. You must provide either all of ex, ey and et or none of them; otherwise an exception is raised.

Returns:

ex, ey, et : array (2D, float64)

The evaluated gradients are returned by this function. Each matrix will have a shape that matches the input images.

- shape

tuple <– The shape pre-configured for this gradient estimator: (height, width)

- class bob.ip.optflow.hornschunck.VanillaFlow

Bases: object

Estimates the Optical Flow between images.

Estimates the Optical Flow between two images (image1, the starting image, and image2, the final image). It does this using the iterative method described by Horn & Schunck in the paper titled “Determining Optical Flow”, published in 1981, Artificial Intelligence, Vol. 17, No. 1-3, pp. 185-203.

The method constrains the calculation with two assertions that can be made on a natural sequence of images:

- For the same lighting conditions, the brightness ($E$) of the shapes in an image does not change and, therefore, the derivative of $E$ with respect to time ($dE/dt$) equals zero.
- The relative velocities of adjacent points in an image vary smoothly. The smoothness constraint is applied on the image data using the Laplacian operator.

It then approximates conditions 1 and 2 above using a Taylor series expansion, ignoring terms of order greater than or equal to 2. This technique is also known as “Finite Differences” and is used in other engineering fields such as Fluid Mechanics.
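For reference, the first-order step for condition 1 can be written out explicitly. Consider a point that moves by $(\delta x, \delta y)$ during $\delta t$. Expanding its brightness and dropping the higher-order terms gives the brightness constancy constraint of Horn & Schunck, with $u = dx/dt$ and $v = dy/dt$:

$$
\begin{aligned}
E(x + \delta x,\, y + \delta y,\, t + \delta t) &\approx E(x, y, t) + \delta x\, E_x + \delta y\, E_y + \delta t\, E_t \\
E(x + \delta x,\, y + \delta y,\, t + \delta t) &= E(x, y, t) \quad \text{(brightness constancy)} \\
\Rightarrow \quad E_x\, u + E_y\, v + E_t &= 0 \quad \text{(dividing by } \delta t \text{ and letting } \delta t \to 0\text{)}
\end{aligned}
$$

This single equation cannot determine both $u$ and $v$ at a pixel, which is why the smoothness condition (2) is needed.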

The problem is finally posed as an iterative process that simultaneously minimizes conditions 1 and 2 above. A weighting factor ($\alpha$, also sometimes referred to as $\lambda$ in some implementations) controls the relative importance of the two conditions. The higher it gets, the smoother the field will be.

Note

OpenCV also has an implementation for H&S optical flow. It sets

This is the set of equations that are implemented:

$$
\begin{aligned}
u(n+1) &= U(n) - \frac{E_x \left[E_x U(n) + E_y V(n) + E_t\right]}{\alpha^2 + E_x^2 + E_y^2} \\
v(n+1) &= V(n) - \frac{E_y \left[E_x U(n) + E_y V(n) + E_t\right]}{\alpha^2 + E_x^2 + E_y^2}
\end{aligned}
$$

Where:

- $u$ is the relative velocity in the $x$ direction
- $v$ is the relative velocity in the $y$ direction
- $E_x$, $E_y$ and $E_t$ are the partial derivatives of brightness in the $x$, $y$ and $t$ directions, estimated using finite differences based on the input images, i1 and i2
- $U(n)$ is the Laplacian (local-average) estimate of $u$, given by the equations in Section 8 of the paper
- $V(n)$ is the Laplacian (local-average) estimate of $v$, given by the equations in Section 8 of the paper
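The update equations above translate almost line by line into NumPy. This is a minimal sketch rather than the library's implementation. The gradients $E_x$, $E_y$, $E_t$ are taken as given. The local averages $U(n)$ and $V(n)$ use the 3x3 averaging weights documented for laplacian_avg_hs. Replicating edge pixels at the border is an assumption. The names `local_average` and `hs_iterate_sketch` are hypothetical.

```python
import numpy as np

# 3x3 averaging weights from the Horn & Schunck paper (see laplacian_avg_hs).
HS_AVG = np.array([[1 / 12, 1 / 6, 1 / 12],
                   [1 / 6, 0.0, 1 / 6],
                   [1 / 12, 1 / 6, 1 / 12]])


def local_average(f):
    """Weighted 3x3 neighbourhood average; edge pixels are replicated (assumption).

    The weights are symmetric, so correlation and convolution coincide.
    """
    padded = np.pad(f, 1, mode="edge")
    h, w = f.shape
    out = np.zeros(f.shape, dtype=np.float64)
    for dy in range(3):
        for dx in range(3):
            out += HS_AVG[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out


def hs_iterate_sketch(ex, ey, et, alpha, iterations, u=None, v=None):
    """Apply the Horn & Schunck update `iterations` times, starting from u, v (default 0)."""
    u = np.zeros(ex.shape) if u is None else np.array(u, dtype=np.float64)
    v = np.zeros(ex.shape) if v is None else np.array(v, dtype=np.float64)
    denom = alpha ** 2 + ex ** 2 + ey ** 2
    for _ in range(iterations):
        u_bar, v_bar = local_average(u), local_average(v)  # U(n), V(n)
        residual = (ex * u_bar + ey * v_bar + et) / denom
        u = u_bar - ex * residual
        v = v_bar - ey * residual
    return u, v


# Uniform horizontal motion: E_x = 1, E_y = 0 and E_t = -0.5 imply u = 0.5, v = 0.
shape = (6, 6)
ex, ey, et = np.ones(shape), np.zeros(shape), np.full(shape, -0.5)
u, v = hs_iterate_sketch(ex, ey, et, alpha=1.0, iterations=50)
print(u.mean(), v.mean())
```

With alpha = 1 on this toy input, each step removes half of the remaining error in u. With alpha around 200, each step removes only about 1/40001 of it, so many more iterations would be needed.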

According to paper,

should be more or less set to

noise in estimating

should be more or less set to

noise in estimating  . In practice, many algorithms

consider values around 200 a good default. The higher this number is,

the more importance on smoothing you will be putting.

. In practice, many algorithms

consider values around 200 a good default. The higher this number is,

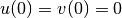

the more importance on smoothing you will be putting.The initial conditions are set such that

,

except in the case where you provide them. For example, if you are

analyzing a video stream, it is a good idea to use the previous

estimate as the initial conditions.

,

except in the case where you provide them. For example, if you are

analyzing a video stream, it is a good idea to use the previous

estimate as the initial conditions.Note

This is a dense flow estimator. The optical flow is computed for all pixels in the image.

Constructor Documentation:

bob.ip.optflow.hornschunck.VanillaFlow ((height, width))

Initializes the functor with the sizes of images to be treated.

Parameters:

(height, width) : tuple

The height and width of the images to be fed into the flow estimator.

Class Members:

- estimate(alpha, iterations, image1, image2[, u, v]) → u, v

Estimates the optical flow leading to image2, using the preceding image image1. All input images should be 2D 64-bit float arrays with the shape (height, width) specified when the object was constructed.

Parameters:

alpha : float

The weighting factor between brightness constancy and field smoothness. According to the original paper, $\alpha^2$ should be roughly equal to the noise in the estimate of $E_x^2 + E_y^2$. In practice, many algorithms consider values around 200 a good default. The higher this number, the more weight is given to smoothness.

iterations : int

Number of iterations for which to minimize the flow error.

image1, image2 : array-like (2D, float64)

Sequence of images to estimate the flow from.

u, v : array (2D, float64)

The estimated flows in the horizontal and vertical directions (respectively) will be output in these variables, which should have dimensions matching those of this functor. If you don’t provide arrays for u and v, they will be allocated internally and returned. You must provide either both u and v or neither; otherwise an exception is raised. Note that if you provide non-zero u and v, they are taken as initial values for the error minimization. These arrays are updated with the final value of the flow leading to image2.

Returns:

u, v : array (2D, float)

The estimated flows in the horizontal and vertical directions (respectively).

- eval_eb(image1, image2, u, v) → error

Calculates the brightness error ($E_b$) as defined in the paper:

$$E_b = E_x u + E_y v + E_t$$

Parameters:

image1, image2 : array-like (2D, float64)

Sequence of images the flow was estimated with.

u, v : array-like (2D, float64)

The estimated flows in the horizontal and vertical directions (respectively), which should have dimensions matching those of this functor.

Returns:

error : array (2D, float)

The evaluated brightness error.

- eval_ec2(u, v) → error

Calculates the square of the smoothness error ($E_c^2$) using the formula described in the paper, and returns its discrete (per-pixel) values.

Parameters:

u, v : array-like (2D, float64)

The estimated flows in the horizontal and vertical directions (respectively), which should have dimensions matching those of this functor.

Returns:

error : array (2D, float)

The square of the smoothness error.

- shape

tuple <– The shape pre-configured for this flow estimator: (height, width)


- bob.ip.optflow.hornschunck.flow_error(image1, image2, u, v) → E

Computes the generalized flow error between two images.

This function calculates the flow error between a pair of images:

Parameters:

image1, image2 : array-like (2D, float64)

Sequence of images the flow was estimated with.

u, v : array-like (2D, float64)

The estimated flows in the horizontal and vertical directions (respectively), which should have dimensions matching those of image1 and image2.

Returns:

E : array (2D, float)

The estimated flow error E.

- bob.ip.optflow.hornschunck.laplacian_avg_hs(input) → output

Filters the input image using the Laplacian (averaging) operator.

An approximation to the Laplacian operator, using the following (non-separable) kernel:

$$
k = \begin{bmatrix}
-1 & -2 & -1 \\
-2 & 12 & -2 \\
-1 & -2 & -1
\end{bmatrix}
$$

This is the kernel used in the Horn & Schunck paper. To calculate the $\bar{u}$ value, we must drop the central term and multiply by $-1/12$, yielding:

$$
k = \begin{bmatrix}
\frac{1}{12} & \frac{1}{6} & \frac{1}{12} \\
\frac{1}{6} & 0 & \frac{1}{6} \\
\frac{1}{12} & \frac{1}{6} & \frac{1}{12}
\end{bmatrix}
$$

Note

You will get the wrong results if you use the Laplacian kernel directly.

Parameters:

input : array-like (2D, float64)

The 2D array to which you’d like to apply the Laplacian operator.

Returns:

output : array (2D, float)

The result of applying the laplacian operator on input.
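A quick numerical check shows why the averaging form is useful. Following the paper, the Laplacian is approximated as $\nabla^2 u \approx \kappa(\bar{u} - u)$, with $\kappa = 3$ for these weights and unit grid spacing. The NumPy sketch below applies the averaging kernel to $u = x^2$, whose Laplacian is exactly 2. It uses its own hypothetical helper, `average_3x3_interior`, and inspects only interior pixels, because the library's border handling is not documented here.

```python
import numpy as np

# Averaging weights obtained from the Horn & Schunck Laplacian kernel.
K_AVG = np.array([[1 / 12, 1 / 6, 1 / 12],
                  [1 / 6, 0.0, 1 / 6],
                  [1 / 12, 1 / 6, 1 / 12]])


def average_3x3_interior(f, k):
    """Apply a symmetric 3x3 kernel, returning only the (h-2, w-2) interior."""
    h, w = f.shape
    out = np.zeros((h - 2, w - 2))
    for dy in range(3):
        for dx in range(3):
            out += k[dy, dx] * f[dy:dy + h - 2, dx:dx + w - 2]
    return out


x = np.arange(7, dtype=np.float64)
u = np.tile(x ** 2, (5, 1))                 # u[y, x] = x^2, so the Laplacian is 2
u_bar = average_3x3_interior(u, K_AVG)
laplacian = 3.0 * (u_bar - u[1:-1, 1:-1])   # kappa = 3 for these weights
print(laplacian)
```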

- bob.ip.optflow.hornschunck.laplacian_avg_hs_opencv(input) → output

Filters the input image using the Laplacian (averaging) operator.

An approximation to the Laplacian operator, using the following (non-separable) kernel:

$$
k = \begin{bmatrix}
0 & -1 & 0 \\
-1 & 4 & -1 \\
0 & -1 & 0
\end{bmatrix}
$$

This kernel is used as the Laplacian operator in OpenCV. To calculate the $\bar{u}$ value, we must drop the central term and multiply by $-1/4$, yielding:

$$
k = \begin{bmatrix}
0 & \frac{1}{4} & 0 \\
\frac{1}{4} & 0 & \frac{1}{4} \\
0 & \frac{1}{4} & 0
\end{bmatrix}
$$

Note

You will get the wrong results if you use the Laplacian kernel directly.

Parameters:

input : array-like (2D, float64)

The 2D array to which you’d like to apply the Laplacian operator.

Returns:

output : array (2D, float)

The result of applying the laplacian operator on input.
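Both Laplacian functions above follow the same recipe: drop the central coefficient and scale by minus the reciprocal of the central value. This recipe can be written as a small helper. The sketch only reproduces the kernel arithmetic shown above. `averaging_from_laplacian` is a hypothetical name, not part of the module.

```python
import numpy as np


def averaging_from_laplacian(k):
    """Turn a 3x3 Laplacian kernel into the matching neighbourhood-averaging kernel."""
    k = np.asarray(k, dtype=np.float64)
    avg = -k / k[1, 1]   # multiply by -1 / (central value)
    avg[1, 1] = 0.0      # drop the central term
    return avg


hs = averaging_from_laplacian([[-1, -2, -1], [-2, 12, -2], [-1, -2, -1]])
cv = averaging_from_laplacian([[0, -1, 0], [-1, 4, -1], [0, -1, 0]])
print(hs)
print(cv)
```

In both cases the resulting weights sum to 1. Applying either averaging kernel therefore leaves a constant image unchanged.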