Idiap at NeurIPS 2020

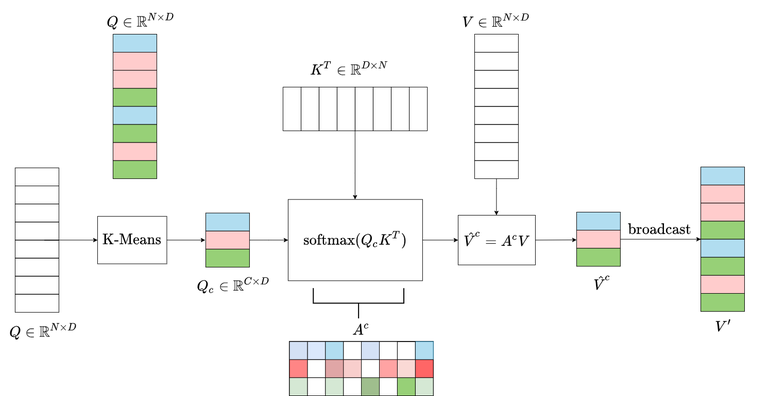

Transformers have recently gained a lot of popularity after setting a new state of the art on a number of applications dealing with text, image, and speech data. However, computing the attention matrix, which is their key component, has quadratic complexity with respect to the sequence length, making them prohibitively expensive for long sequences. To address this limitation, clustered attention approximates the true attention by grouping queries into clusters and computing attention only for the cluster centroids. This approximation is further improved by recomputing the attention of each query on a small number of important keys. For any query, the keys that receive the highest clustered attention weights from the centroid of its cluster are good candidates.
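The idea can be illustrated with a minimal NumPy sketch. This is not the project's implementation: it assumes a single attention head with no batching, uses plain Euclidean k-means to group the queries (the text above does not say which clustering method is used), and the function names `kmeans` and `clustered_attention` are hypothetical. The way the recomputed top-k weights are rescaled so that each row still sums to one is also an assumption about how the two parts can be combined.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def full_attention(Q, K, V):
    """Standard softmax attention; the score matrix is (n_queries, n_keys)."""
    return softmax(Q @ K.T / np.sqrt(Q.shape[-1])) @ V


def kmeans(X, n_clusters, n_iters=10, seed=0):
    """Plain Lloyd's k-means (an assumption made for this sketch)."""
    rng = np.random.default_rng(seed)
    centroids = X[rng.choice(len(X), n_clusters, replace=False)]  # fancy indexing copies
    for _ in range(n_iters):
        dists = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(-1)
        labels = dists.argmin(axis=1)
        for c in range(n_clusters):
            members = X[labels == c]
            if len(members) > 0:  # an empty cluster keeps its previous centroid
                centroids[c] = members.mean(axis=0)
    return labels, centroids


def clustered_attention(Q, K, V, n_clusters, top_k=0):
    """Approximate attention using query centroids.

    With top_k == 0, every query reuses the attention output of its centroid.
    With top_k > 0 (at most the number of keys), attention on the top_k keys
    of each cluster is recomputed exactly for every query in that cluster.
    """
    d = Q.shape[-1]
    labels, centroids = kmeans(Q, n_clusters)
    A_c = softmax(centroids @ K.T / np.sqrt(d))  # (n_clusters, n_keys)
    if top_k == 0:
        return (A_c @ V)[labels]  # broadcast centroid outputs to the queries

    out = np.empty((Q.shape[0], V.shape[1]))
    # Indices of the top_k keys with the highest centroid attention, per cluster.
    top_idx = np.argpartition(-A_c, top_k - 1, axis=1)[:, :top_k]
    for c in range(n_clusters):
        members = np.where(labels == c)[0]
        if len(members) == 0:
            continue
        idx = top_idx[c]
        mass = A_c[c, idx].sum()  # attention mass the centroid puts on its top keys
        # Exact attention restricted to the top keys, rescaled to the same mass.
        A_top = softmax(Q[members] @ K[idx].T / np.sqrt(d)) * mass
        rest = A_c[c].copy()
        rest[idx] = 0.0  # the remaining keys keep the centroid weights
        out[members] = A_top @ V[idx] + rest @ V
    return out


# Usage on random data: compare both variants with full attention.
rng = np.random.default_rng(0)
Q, K, V = (rng.normal(size=(256, 16)) for _ in range(3))
exact = full_attention(Q, K, V)
for k in (0, 32):
    approx = clustered_attention(Q, K, V, n_clusters=16, top_k=k)
    print(f"top_k={k}: mean abs error = {np.abs(approx - exact).mean():.4f}")
```

In this sketch the softmax scores are computed for `n_clusters` centroids instead of every query, so the score matrix has `n_clusters` rows rather than one row per query; the optional top-k step adds `top_k` exact scores per query.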

More information, including code, is available on the project page: https://clustered-transformers.github.io/